
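# Introduction to NLP

## 0 Objectives

- Understand what NLP is
- Understand a brief history of NLP
- Understand where NLP is applied
- Understand the scope of NLP covered in this tutorial

## 1 What Is NLP?

Natural language processing (NLP) is the field where computer science and linguistics meet. It studies how to convert between human language and forms that computers can process, so that computers can understand and generate natural language.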

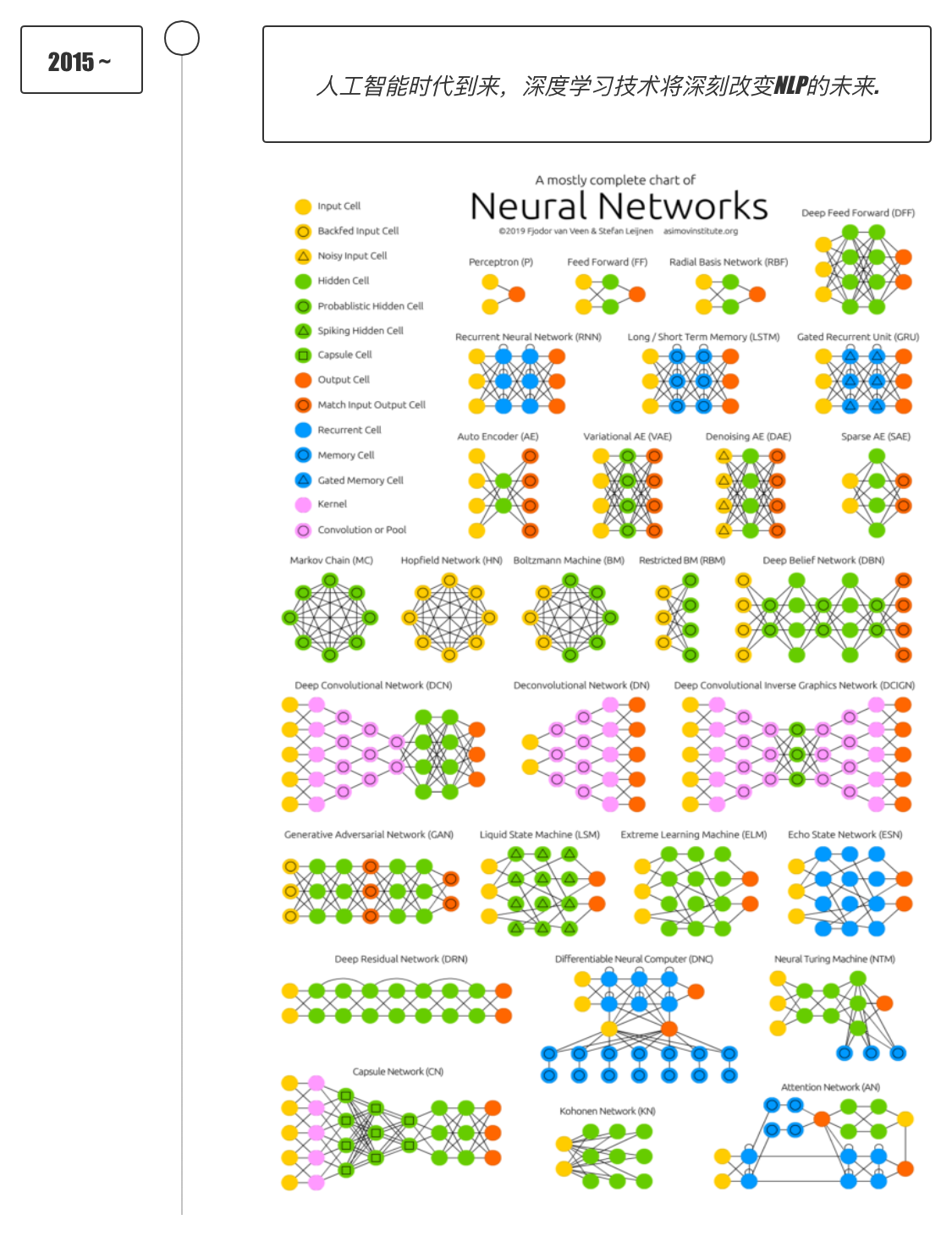

## 2 Brief History

## 3 Applications

- Voice assistants
- Machine translation
- Search engines
- Intelligent question answering
- ...

### 3.1 Voice Assistants

An interview on iFLYTEK's speech recognition technology:

<video src="/Volumes/mobileData/data/%E5%AD%A6%E4%B9%A0%E8%B5%84%E6%96%99/01-%E9%98%B6%E6%AE%B51-3%EF%BC%88python%E5%9F%BA%E7%A1%80%20%E3%80%81python%E9%AB%98%E7%BA%A7%E3%80%81%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0%EF%BC%89/03-%E6%B7%B1%E5%BA%A6%E5%AD%A6%E4%B9%A0%E4%B8%8ENLP/01-%E8%AE%B2%E4%B9%89/HTML/mkdocs_NLP/img/xunfei.mp4"></video>

### 3.2 Machine Translation

A machine translation system featured on CCTV, "letting the world talk to each other!"

<video src="/Volumes/mobileData/data/%E5%AD%A6%E4%B9%A0%E8%B5%84%E6%96%99/01-%E9%98%B6%E6%AE%B51-3%EF%BC%88python%E5%9F%BA%E7%A1%80%20%E3%80%81python%E9%AB%98%E7%BA%A7%E3%80%81%E6%9C%BA%E5%99%A8%E5%AD%A6%E4%B9%A0%EF%BC%89/03-%E6%B7%B1%E5%BA%A6%E5%AD%A6%E4%B9%A0%E4%B8%8ENLP/01-%E8%AE%B2%E4%B9%89/HTML/mkdocs_NLP/img/fanyi.mp4"></video>

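## 4 NLP in This Series

### 4.1 Course Philosophy and Goals

This series starts you on your NLP journey. It is designed from the perspective of real-world industry practice. The content follows standard enterprise development workflows and typical career paths, with the goal of helping you become a genuine AI-NLP engineer.

### 4.2 Why the Content Is Up to Date

The course reflects where the field stands today. It focuses on NLP progress in deep learning, which is likely to remain an important direction for NLP for years, and possibly decades, to come. Traditional NLP topics such as linguistic rules, classical models, and feature engineering are condensed. In their place, the course covers more advanced techniques that perform better and are more widely used, such as the Transformer and transfer learning.

### 4.3 Course Outline

| Module | Main content | Case studies |
| ------ | ------------ | ------------ |
| Text preprocessing | Basic text processing methods, text tensor representation, text data analysis, text augmentation, etc. | Reuters news category classification |
| Classic sequence models | HMM and CRF models: purpose, usage, differences, and current status | |
| RNN and its variants | RNN, LSTM, and GRU models: purpose, construction, and pros and cons | Worldwide person-name classification; English-to-French translation |
| Transformer | The Transformer model: purpose, detailed principles, and step-by-step construction | Building a Transformer-based language model |
| Transfer learning | The fastText tool, transfer learning theory, standard NLP datasets, and using pre-trained models | Sentiment analysis of hotel reviews from across China |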

## 5 Getting Started with the Cloud Server

### 5.1 Basic Operations

```shell
# Show CPU information, including the number of logical cores
lscpu
```

```text
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
CPU(s): 4
On-line CPU(s) list: 0-3
Thread(s) per core: 2
Core(s) per socket: 2
Socket(s): 1
NUMA node(s): 1
Vendor ID: GenuineIntel
CPU family: 6
Model: 85
Model name: Intel(R) Xeon(R) Platinum 8269CY CPU @ 2.50GHz
Stepping: 7
CPU MHz: 2500.000
BogoMIPS: 5000.00
Hypervisor vendor: KVM
Virtualization type: full
L1d cache: 32K
L1i cache: 32K
L2 cache: 1024K
L3 cache: 36608K
NUMA node0 CPU(s): 0-3
Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl eagerfpu pni pclmulqdq monitor ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm mpx avx512f avx512dq rdseed adx smap avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 arat avx512_vnni
```
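As a quick sanity check, the number of logical CPUs should equal threads per core × cores per socket × sockets. The sample above reports 2 × 2 × 1 = 4, which matches its `CPU(s): 4` line. The sketch below does that arithmetic with a small helper. `logical_cpus` is a hypothetical name, not a system command. Where `lscpu` is installed, the sketch also prints just the relevant fields next to the count reported by `nproc`.

Two assumptions are built in. First, setting `LC_ALL=C` should give English field names on servers whose locale localizes `lscpu` output. Second, the pattern allows leading spaces because newer `lscpu` versions indent some fields.

```shell
#!/usr/bin/env bash
# Logical CPUs = threads per core x cores per socket x sockets
logical_cpus() {
  local threads=$1 cores=$2 sockets=$3
  echo $(( threads * cores * sockets ))
}

# Values from the sample output above: 2 threads/core, 2 cores/socket, 1 socket
echo "expected logical CPUs: $(logical_cpus 2 2 1)"

# Show only the topology fields, if lscpu is available.
# LC_ALL=C asks for English field names; [[:space:]]* tolerates indented layouts.
if command -v lscpu >/dev/null 2>&1; then
  LC_ALL=C lscpu | grep -E '^[[:space:]]*(CPU\(s\)|Thread\(s\) per core|Core\(s\) per socket|Socket\(s\)):'
fi

# Number of processing units available to the current process
echo "nproc: $(nproc)"
```

Note that `nproc` can report fewer CPUs than `lscpu` when the process is restricted to a subset of cores, for example inside some containers.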

Check the available computing environments:

```shell
# Go to the user's home directory
cd /home/ec2-user/
# Open the README, which lists the preinstalled environments
vim README
```

You will see all of the available virtual environments:

```text
Please use one of the following commands to start the required environment with the framework of your choice:
for MXNet(+Keras2) with Python3 (CUDA 10.1 and Intel MKL-DNN) ____________________________________ source activate mxnet_p36
for MXNet(+Keras2) with Python2 (CUDA 10.1 and Intel MKL-DNN) ____________________________________ source activate mxnet_p27
for MXNet(+AWS Neuron) with Python3 ___________________________________________________ source activate aws_neuron_mxnet_p36
for TensorFlow(+Keras2) with Python3 (CUDA 10.0 and Intel MKL-DNN) __________________________ source activate tensorflow_p36
for TensorFlow(+Keras2) with Python2 (CUDA 10.0 and Intel MKL-DNN) __________________________ source activate tensorflow_p27
for TensorFlow(+AWS Neuron) with Python3 _________________________________________ source activate aws_neuron_tensorflow_p36
for TensorFlow 2(+Keras2) with Python3 (CUDA 10.1 and Intel MKL-DNN) _______________________ source activate tensorflow2_p36
for TensorFlow 2(+Keras2) with Python2 (CUDA 10.1 and Intel MKL-DNN) _______________________ source activate tensorflow2_p27
for TensorFlow 2.3 with Python3.7 (CUDA 10.2 and Intel MKL-DNN) _____________________ source activate tensorflow2_latest_p37
for PyTorch 1.4 with Python3 (CUDA 10.1 and Intel MKL) _________________________________________ source activate pytorch_p36
for PyTorch 1.4 with Python2 (CUDA 10.1 and Intel MKL) _________________________________________ source activate pytorch_p27
for PyTorch 1.6 with Python3 (CUDA 10.1 and Intel MKL) ________________________________ source activate pytorch_latest_p36
for PyTorch (+AWS Neuron) with Python3 ______________________________________________ source activate aws_neuron_pytorch_p36
for Chainer with Python2 (CUDA 10.0 and Intel iDeep) ___________________________________________ source activate chainer_p27
for Chainer with Python3 (CUDA 10.0 and Intel iDeep) ___________________________________________ source activate chainer_p36
for base Python2 (CUDA 10.0) _______________________________________________________________________ source activate python2
for base Python3 (CUDA 10.0) _______________________________________________________________________ source activate python3
```
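The README is fairly long. To pull out just the environment names, a minimal sketch like the one below can help. It assumes each entry contains `source activate <name>`, exactly as in the listing above. `list_envs` is a hypothetical helper, not something the server provides.

```shell
#!/usr/bin/env bash
# Print just the environment names from lines containing "source activate <name>".
# Reads the file given as the first argument, or standard input if none is given.
list_envs() {
  grep -oE 'source activate [A-Za-z0-9_]+' "${1:--}" | awk '{print $3}'
}

# Example (on the server):
# list_envs /home/ec2-user/README
```

Run on the README above, this would print one name per line, such as `pytorch_latest_p36`.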

To use Python 3 with the newer PyTorch release:

```shell
source activate pytorch_latest_p36
```

Check the exact Python and pip versions:

```shell
# Show the Python version
python3 -V

# Show the pip version
pip -V

# List installed packages, including key scientific computing packages such as TensorFlow and PyTorch
pip list
```

> - Output:

```text
Python 3.6.10 :: Anaconda, Inc.
pip 20.0.2 from /home/ec2-user/anaconda3/envs/pytorch_latest_p36/lib/python3.6/site-packages/pip (python 3.6)
```
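`pip list` prints every installed package, which can be a long list. To see only a few key scientific computing packages, you can filter its output.

The sketch below is an illustration only. The package names in the pattern (`torch`, `torchvision`, `tensorflow`, `numpy`, `pandas`, `scikit-learn`) are example choices, and `key_pkgs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Keep only the rows of `pip list` output for a few key scientific packages.
# The names below are examples; edit the pattern to suit your project.
# [[:space:]] after the name avoids matching e.g. "torchtext" when looking for "torch".
key_pkgs() {
  grep -iE '^(torch|torchvision|tensorflow(-gpu)?|numpy|pandas|scikit-learn)[[:space:]]'
}

# Example:
# pip list | key_pkgs
```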

------

- Check the graph database:

```shell
# Start the graph database (we will study this database in depth later in the course)
neo4j start

# Stop the database
neo4j stop
```

------

> - Output:

```text
Active database: graph.db
Directories in use:
  home: /var/lib/neo4j
  config: /etc/neo4j
  logs: /var/log/neo4j
  plugins: /var/lib/neo4j/plugins
  import: /var/lib/neo4j/import
  data: /var/lib/neo4j/data
  certificates: /var/lib/neo4j/certificates
  run: /var/run/neo4j
Starting Neo4j.
Started neo4j (pid 17565). It is available at http://0.0.0.0:7474/
There may be a short delay until the server is ready.
See /var/log/neo4j/neo4j.log for current status.

Stopping Neo4j.. stopped
```
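The start-up log warns that there may be a short delay before the server is ready. In a script, one way to wait for it is to poll the HTTP address from the log until it answers.

This is a sketch with two assumptions: `curl` is installed, and the Neo4j HTTP endpoint on port 7474 responds successfully to a plain GET once the server is up. `wait_for_http` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Poll a URL once per second until it responds successfully or the attempts run out.
# Returns 0 when the URL answers, 1 otherwise. Requires curl.
wait_for_http() {
  local url=$1
  local attempts=${2:-30}   # default: 30 attempts
  local i
  for (( i = 1; i <= attempts; i++ )); do
    # -s: no progress output, -f: treat HTTP errors as failure,
    # -o /dev/null: discard the body, --max-time: give up on a hung request
    if curl -sf -o /dev/null --max-time 2 "$url"; then
      echo "ready after $i attempt(s): $url"
      return 0
    fi
    # Wait before the next attempt (no wait after the last one)
    if (( i < attempts )); then
      sleep 1
    fi
  done
  echo "not ready after $attempts attempt(s): $url" >&2
  return 1
}

# Example (on the server):
# neo4j start && wait_for_http http://localhost:7474/ 60
```

Polling the address from the log keeps the check independent of log-file formats. Checking only the exit status also means the sketch works whether or not the response body changes between Neo4j versions.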

------

- Run a program that uses PyTorch:

```shell
# Switch to the directory containing the demo script
cd /data

# Run the demo, which prints the structure of a small network
python3 pytorch_demo.py
```

Output:

```text
Net(
  (conv1): Conv2d(1, 6, kernel_size=(3, 3), stride=(1, 1))
  (conv2): Conv2d(6, 16, kernel_size=(3, 3), stride=(1, 1))
  (fc1): Linear(in_features=576, out_features=120, bias=True)
  (fc2): Linear(in_features=120, out_features=84, bias=True)
  (fc3): Linear(in_features=84, out_features=10, bias=True)
)
```
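The contents of `pytorch_demo.py` are not shown here. Its printed layers look like the small LeNet-style network from the official PyTorch neural-network tutorial (the version with 3×3 kernels). That network feeds a 1×32×32 image through each convolution and follows each one with 2×2 max pooling.

Under that assumption, you can check the `in_features=576` of `fc1` by hand. Each unpadded layer produces ⌊(n − k)/s⌋ + 1 outputs per side, so the side length goes 32 → 30 → 15 → 13 → 6. Multiplying gives 16 channels × 6 × 6 = 576. The shell arithmetic below repeats that calculation; `out_size` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Output side length of an unpadded convolution or pooling layer:
# (n - k) / s + 1, using integer (floor) division
out_size() { echo $(( ($1 - $2) / $3 + 1 )); }

n=32                      # assumed input: 1 x 32 x 32 image
n=$(out_size "$n" 3 1)    # conv1: 3x3 kernel, stride 1 -> 30
n=$(out_size "$n" 2 2)    # assumed 2x2 max pooling, stride 2 -> 15
n=$(out_size "$n" 3 1)    # conv2: 3x3 kernel, stride 1 -> 13
n=$(out_size "$n" 2 2)    # assumed 2x2 max pooling, stride 2 -> 6
echo "fc1 in_features = 16 * $n * $n = $(( 16 * n * n ))"
```

If the actual script uses a different input size or pooling, the same formula applies with those values substituted.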
