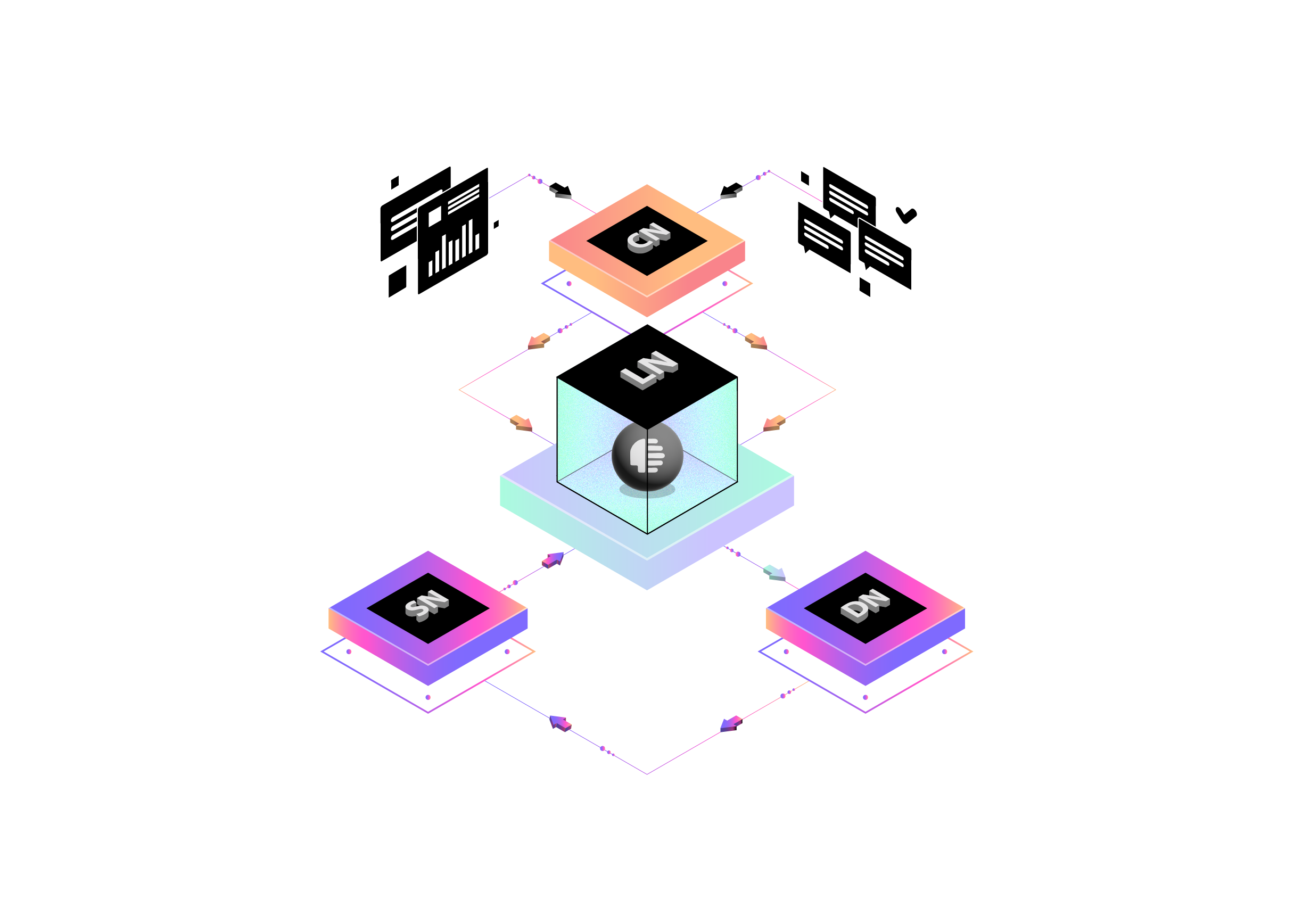

The Storage Node Network (SNN) is a distributed, high-speed cache layer that acts as network RAM for the DataHive ecosystem. By pairing storage nodes with the nodes they serve and scheduling workloads predictively, an SNN keeps data available ahead of each connected node's planned operations, in particular for Legal Intelligence, Legal Knowledge, and Consent Intelligence model operations.

    interface StorageNode {
      // Core functionality
      id: string;
      region: string;
      capacity: StorageMetrics;
      status: NodeStatus;

      // Node pairing
      connectedNodes: Map<string, NodeConnection>;
      workloadSchedule: Schedule;

      // Performance metrics
      latency: number;
      throughput: number;
      cacheHitRate: number;
    }
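
As a rough illustration of how the `StorageNode` fields might drive pairing, the sketch below picks the lowest-latency active node in a region. The `NodeStatus` values, the reduced `StorageNodeSummary` type, the `selectStorageNode` function, the node ids, and the units (milliseconds for `latency`, a fraction between 0 and 1 for `cacheHitRate`) are all assumptions; the source does not define them.

```typescript
// Hypothetical status values; the source does not define NodeStatus.
type NodeStatus = 'active' | 'degraded' | 'offline';

// Reduced view of StorageNode with only the fields this sketch needs.
interface StorageNodeSummary {
  id: string;
  region: string;
  status: NodeStatus;
  latency: number;      // assumed to be milliseconds
  cacheHitRate: number; // assumed to be a fraction in [0, 1]
}

// Pick the active node in the requested region with the lowest latency,
// breaking ties by higher cache hit rate. Returns undefined if none qualify.
function selectStorageNode(
  nodes: StorageNodeSummary[],
  region: string
): StorageNodeSummary | undefined {
  const candidates = nodes
    .filter((n) => n.region === region && n.status === 'active')
    .sort((a, b) => a.latency - b.latency || b.cacheHitRate - a.cacheHitRate);
  return candidates[0];
}

// Illustrative data only.
const exampleNodes: StorageNodeSummary[] = [
  { id: 'snn-eu-1', region: 'eu-west', status: 'active', latency: 4.2, cacheHitRate: 0.96 },
  { id: 'snn-eu-2', region: 'eu-west', status: 'active', latency: 2.8, cacheHitRate: 0.93 },
  { id: 'snn-eu-3', region: 'eu-west', status: 'offline', latency: 1.1, cacheHitRate: 0.99 },
  { id: 'snn-us-1', region: 'us-east', status: 'active', latency: 3.0, cacheHitRate: 0.97 },
];

const chosenNode = selectStorageNode(exampleNodes, 'eu-west');
console.log(chosenNode ? chosenNode.id : 'no node available');
```

Here the offline node is skipped despite its lower latency, so `snn-eu-2` is selected. A real pairing policy would likely also weigh capacity and the workload schedule.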

    interface NodeConnection {
      nodeType: 'LN1' | 'Knowledge' | 'Consent';
      modelType: ModelType;
      scheduledOperations: ScheduledOperation[];
      resourceAllocation: ResourceAllocation;
    }

Compute:

CPU: >= 32 cores

Clock Speed: >= 3.5GHz

Architecture: x86_64 or ARM64

Instructions: AVX-512 support (x86_64)

Memory:

RAM: >= 128GB

Type: DDR5

Speed: >= 4800MT/s

ECC: Required

Bandwidth: >= 100GB/s

Network:

Bandwidth: >= 10Gbps dedicated

Latency: <= 5ms to nearest node

Jitter: <= 1ms

Packet Loss: <= 0.01%

    interface PairingManager {
      // Pairing operations
      establishConnection(target: DataHiveNode): Promise<ConnectionStatus>;
      synchronizeSchedule(schedule: Schedule): Promise<void>;

      // Resource management
      allocateResources(requirements: ResourceRequirement): Promise<Allocation>;
      optimizeAllocation(): Promise<OptimizationResult>;
    }

Schedule Configuration:

Forecast Window: 7-30 days

Update Frequency: Daily

Resource Planning: Weekly

Performance Windows: Configurable

LN1 Support:

Pre-cache Window: 7 days

Model Updates: Scheduled

Training Data: Distributed

Inference: Real-time

Knowledge Support:

Pre-cache Window: 30 days

Base Updates: Weekly

Query Cache: Geographic

Distribution: Regional

Consent Support:

Pre-cache Window: 3 days

Response Time: <= 100ms

Data Locality: Regional

Updates: Daily

- Predictive pre-caching

- Workload-based allocation

- Geographic optimization

- Load balancing

- Memory pressure handling
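
One way to read predictive pre-caching alongside the per-model pre-cache windows (7 days for LN1, 30 for Knowledge, 3 for Consent) is as a filter over scheduled operations. The following is a minimal sketch under that assumption; `PlannedOperation`, `selectForPreCache`, and the dataset ids are hypothetical and not part of any documented DataHive API.

```typescript
// Node types as declared in NodeConnection.nodeType.
type NodeType = 'LN1' | 'Knowledge' | 'Consent';

// Pre-cache windows in days, taken from the support profiles in this section.
const PRE_CACHE_WINDOW_DAYS: Record<NodeType, number> = {
  LN1: 7,
  Knowledge: 30,
  Consent: 3,
};

// Hypothetical shape; the source does not define ScheduledOperation.
interface PlannedOperation {
  datasetId: string;
  nodeType: NodeType;
  startsAt: Date;
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Return the dataset ids that should be cached now: operations that have not
// started yet and begin within the pre-cache window for their node type,
// ordered by start time so the soonest work is loaded first.
function selectForPreCache(ops: PlannedOperation[], referenceTime: Date): string[] {
  return ops
    .filter((op) => {
      const daysAhead = (op.startsAt.getTime() - referenceTime.getTime()) / MS_PER_DAY;
      return daysAhead >= 0 && daysAhead <= PRE_CACHE_WINDOW_DAYS[op.nodeType];
    })
    .sort((a, b) => a.startsAt.getTime() - b.startsAt.getTime())
    .map((op) => op.datasetId);
}

// Illustrative schedule only.
const referenceTime = new Date('2025-01-01T00:00:00Z');
const plannedOps: PlannedOperation[] = [
  { datasetId: 'consent-ledger', nodeType: 'Consent', startsAt: new Date('2025-01-03T00:00:00Z') },
  { datasetId: 'consent-archive', nodeType: 'Consent', startsAt: new Date('2025-01-06T00:00:00Z') },
  { datasetId: 'ln1-training-batch', nodeType: 'LN1', startsAt: new Date('2025-01-06T00:00:00Z') },
  { datasetId: 'knowledge-base', nodeType: 'Knowledge', startsAt: new Date('2025-01-25T00:00:00Z') },
];

console.log(selectForPreCache(plannedOps, referenceTime));
```

In this example `consent-archive` is left out because it starts five days ahead, beyond the 3-day Consent window, while the LN1 operation on the same date falls inside its 7-day window.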

    interface ResourceManager {
      allocateMemory(requirement: MemoryRequirement): Promise<Allocation>;
      optimizeUsage(): Promise<OptimizationResult>;
      handlePressure(): Promise<PressureResponse>;
      rebalanceLoad(): Promise<LoadBalance>;
    }

Key Indicators:

Cache Hit Rate: >= 95%

Response Time: <= 1ms

Memory Usage: <= 85%

Network Throughput: >= 10GB/s

- Node status tracking

- Connection health

- Resource utilization

- Performance metrics

- Error rates
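
The Key Indicators can be treated as thresholds that monitoring evaluates on each sample. The sketch below assumes a hypothetical `IndicatorSnapshot` shape and `findViolations` helper (neither appears in the source), with units taken from the targets listed above.

```typescript
// One monitoring sample. Units follow the Key Indicators: percentages for
// hit rate and memory usage, milliseconds for response time, GB/s for throughput.
interface IndicatorSnapshot {
  cacheHitRatePct: number;
  responseTimeMs: number;
  memoryUsagePct: number;
  networkThroughputGBps: number;
}

// Return one human-readable message for each indicator outside its target.
function findViolations(s: IndicatorSnapshot): string[] {
  const violations: string[] = [];
  if (s.cacheHitRatePct < 95) {
    violations.push(`cache hit rate ${s.cacheHitRatePct}% is below 95%`);
  }
  if (s.responseTimeMs > 1) {
    violations.push(`response time ${s.responseTimeMs}ms exceeds 1ms`);
  }
  if (s.memoryUsagePct > 85) {
    violations.push(`memory usage ${s.memoryUsagePct}% exceeds 85%`);
  }
  if (s.networkThroughputGBps < 10) {
    violations.push(`network throughput ${s.networkThroughputGBps}GB/s is below 10GB/s`);
  }
  return violations;
}

// Illustrative samples only.
const healthySample: IndicatorSnapshot = {
  cacheHitRatePct: 96,
  responseTimeMs: 0.8,
  memoryUsagePct: 80,
  networkThroughputGBps: 12,
};
const degradedSample: IndicatorSnapshot = {
  cacheHitRatePct: 91,
  responseTimeMs: 0.7,
  memoryUsagePct: 92,
  networkThroughputGBps: 12,
};

console.log(findViolations(healthySample));
console.log(findViolations(degradedSample));
```

The healthy sample produces no messages; the degraded one reports the cache hit rate and memory usage. In practice such a check would feed alerting or the memory pressure handling described earlier rather than print to the console.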