diff --git a/.circleci/config.yml b/.circleci/config.yml

index d8ccf68f0141..daab703b4eff 100644

--- a/.circleci/config.yml

+++ b/.circleci/config.yml

@@ -17,6 +17,4 @@ workflows:

mapping: |

.circleci/.* run-all-workflows true

gpt4all-backend/.* run-all-workflows true

- gpt4all-bindings/python/.* run-python-workflow true

- gpt4all-bindings/typescript/.* run-ts-workflow true

gpt4all-chat/.* run-chat-workflow true

diff --git a/.circleci/continue_config.yml b/.circleci/continue_config.yml

index 05c78ecab409..8adbee8e6a2a 100644

--- a/.circleci/continue_config.yml

+++ b/.circleci/continue_config.yml

@@ -8,15 +8,9 @@ parameters:

run-all-workflows:

type: boolean

default: false

- run-python-workflow:

- type: boolean

- default: false

run-chat-workflow:

type: boolean

default: false

- run-ts-workflow:

- type: boolean

- default: false

job-macos-executor: &job-macos-executor

macos:

@@ -1266,25 +1260,6 @@ jobs:

paths:

- ../.ccache

- build-ts-docs:

- docker:

- - image: cimg/base:stable

- steps:

- - checkout

- - node/install:

- node-version: "18.16"

- - run: node --version

- - run: corepack enable

- - node/install-packages:

- pkg-manager: npm

- app-dir: gpt4all-bindings/typescript

- override-ci-command: npm install --ignore-scripts

- - run:

- name: build docs ts yo

- command: |

- cd gpt4all-bindings/typescript

- npm run docs:build

-

deploy-docs:

docker:

- image: circleci/python:3.8

@@ -1295,532 +1270,17 @@ jobs:

command: |

sudo apt-get update

sudo apt-get -y install python3 python3-pip

- sudo pip3 install awscli --upgrade

- sudo pip3 install mkdocs mkdocs-material mkautodoc 'mkdocstrings[python]' markdown-captions pillow cairosvg

+ sudo pip3 install -Ur requirements-docs.txt awscli

- run:

name: Make Documentation

- command: |

- cd gpt4all-bindings/python

- mkdocs build

+ command: mkdocs build

- run:

name: Deploy Documentation

- command: |

- cd gpt4all-bindings/python

- aws s3 sync --delete site/ s3://docs.gpt4all.io/

+ command: aws s3 sync --delete site/ s3://docs.gpt4all.io/

- run:

name: Invalidate docs.gpt4all.io cloudfront

command: aws cloudfront create-invalidation --distribution-id E1STQOW63QL2OH --paths "/*"

- build-py-linux:

- machine:

- image: ubuntu-2204:current

- steps:

- - checkout

- - restore_cache:

- keys:

- - ccache-gpt4all-linux-amd64-

- - run:

- <<: *job-linux-install-backend-deps

- - run:

- name: Build C library

- no_output_timeout: 30m

- command: |

- export PATH=$PATH:/usr/local/cuda/bin

- git submodule update --init --recursive

- ccache -o "cache_dir=${PWD}/../.ccache" -o max_size=500M -p -z

- cd gpt4all-backend

- cmake -B build -G Ninja \

- -DCMAKE_BUILD_TYPE=Release \

- -DCMAKE_C_COMPILER=clang-19 \

- -DCMAKE_CXX_COMPILER=clang++-19 \

- -DCMAKE_CXX_COMPILER_AR=ar \

- -DCMAKE_CXX_COMPILER_RANLIB=ranlib \

- -DCMAKE_C_COMPILER_LAUNCHER=ccache \

- -DCMAKE_CXX_COMPILER_LAUNCHER=ccache \

- -DCMAKE_CUDA_COMPILER_LAUNCHER=ccache \

- -DKOMPUTE_OPT_DISABLE_VULKAN_VERSION_CHECK=ON \

- -DCMAKE_CUDA_ARCHITECTURES='50-virtual;52-virtual;61-virtual;70-virtual;75-virtual'

- cmake --build build -j$(nproc)

- ccache -s

- - run:

- name: Build wheel

- command: |

- cd gpt4all-bindings/python/

- python setup.py bdist_wheel --plat-name=manylinux1_x86_64

- - store_artifacts:

- path: gpt4all-bindings/python/dist

- - save_cache:

- key: ccache-gpt4all-linux-amd64-{{ epoch }}

- when: always

- paths:

- - ../.ccache

- - persist_to_workspace:

- root: gpt4all-bindings/python/dist

- paths:

- - "*.whl"

-

- build-py-macos:

- <<: *job-macos-executor

- steps:

- - checkout

- - restore_cache:

- keys:

- - ccache-gpt4all-macos-

- - run:

- <<: *job-macos-install-deps

- - run:

- name: Install dependencies

- command: |

- pip install setuptools wheel cmake

- - run:

- name: Build C library

- no_output_timeout: 30m

- command: |

- git submodule update --init # don't use --recursive because macOS doesn't use Kompute

- ccache -o "cache_dir=${PWD}/../.ccache" -o max_size=500M -p -z

- cd gpt4all-backend

- cmake -B build \

- -DCMAKE_BUILD_TYPE=Release \

- -DCMAKE_C_COMPILER=/opt/homebrew/opt/llvm/bin/clang \

- -DCMAKE_CXX_COMPILER=/opt/homebrew/opt/llvm/bin/clang++ \

- -DCMAKE_RANLIB=/usr/bin/ranlib \

- -DCMAKE_C_COMPILER_LAUNCHER=ccache \

- -DCMAKE_CXX_COMPILER_LAUNCHER=ccache \

- -DBUILD_UNIVERSAL=ON \

- -DCMAKE_OSX_DEPLOYMENT_TARGET=12.6 \

- -DGGML_METAL_MACOSX_VERSION_MIN=12.6

- cmake --build build --parallel

- ccache -s

- - run:

- name: Build wheel

- command: |

- cd gpt4all-bindings/python

- python setup.py bdist_wheel --plat-name=macosx_10_15_universal2

- - store_artifacts:

- path: gpt4all-bindings/python/dist

- - save_cache:

- key: ccache-gpt4all-macos-{{ epoch }}

- when: always

- paths:

- - ../.ccache

- - persist_to_workspace:

- root: gpt4all-bindings/python/dist

- paths:

- - "*.whl"

-

- build-py-windows:

- machine:

- image: windows-server-2022-gui:2024.04.1

- resource_class: windows.large

- shell: powershell.exe -ExecutionPolicy Bypass

- steps:

- - checkout

- - run:

- name: Update Submodules

- command: |

- git submodule sync

- git submodule update --init --recursive

- - restore_cache:

- keys:

- - ccache-gpt4all-win-amd64-

- - run:

- name: Install dependencies

- command:

- choco install -y ccache cmake ninja wget --installargs 'ADD_CMAKE_TO_PATH=System'

- - run:

- name: Install VulkanSDK

- command: |

- wget.exe "https://sdk.lunarg.com/sdk/download/1.3.261.1/windows/VulkanSDK-1.3.261.1-Installer.exe"

- .\VulkanSDK-1.3.261.1-Installer.exe --accept-licenses --default-answer --confirm-command install

- - run:

- name: Install CUDA Toolkit

- command: |

- wget.exe "https://developer.download.nvidia.com/compute/cuda/11.8.0/network_installers/cuda_11.8.0_windows_network.exe"

- .\cuda_11.8.0_windows_network.exe -s cudart_11.8 nvcc_11.8 cublas_11.8 cublas_dev_11.8

- - run:

- name: Install Python dependencies

- command: pip install setuptools wheel cmake

- - run:

- name: Build C library

- no_output_timeout: 30m

- command: |

- $vsInstallPath = & "C:\Program Files (x86)\Microsoft Visual Studio\Installer\vswhere.exe" -property installationpath

- Import-Module "${vsInstallPath}\Common7\Tools\Microsoft.VisualStudio.DevShell.dll"

- Enter-VsDevShell -VsInstallPath "$vsInstallPath" -SkipAutomaticLocation -DevCmdArguments '-arch=x64 -no_logo'

-

- $Env:PATH += ";C:\VulkanSDK\1.3.261.1\bin"

- $Env:VULKAN_SDK = "C:\VulkanSDK\1.3.261.1"

- ccache -o "cache_dir=${pwd}\..\.ccache" -o max_size=500M -p -z

- cd gpt4all-backend

- cmake -B build -G Ninja `

- -DCMAKE_BUILD_TYPE=Release `

- -DCMAKE_C_COMPILER_LAUNCHER=ccache `

- -DCMAKE_CXX_COMPILER_LAUNCHER=ccache `

- -DCMAKE_CUDA_COMPILER_LAUNCHER=ccache `

- -DKOMPUTE_OPT_DISABLE_VULKAN_VERSION_CHECK=ON `

- -DCMAKE_CUDA_ARCHITECTURES='50-virtual;52-virtual;61-virtual;70-virtual;75-virtual'

- cmake --build build --parallel

- ccache -s

- - run:

- name: Build wheel

- command: |

- cd gpt4all-bindings/python

- python setup.py bdist_wheel --plat-name=win_amd64

- - store_artifacts:

- path: gpt4all-bindings/python/dist

- - save_cache:

- key: ccache-gpt4all-win-amd64-{{ epoch }}

- when: always

- paths:

- - ..\.ccache

- - persist_to_workspace:

- root: gpt4all-bindings/python/dist

- paths:

- - "*.whl"

-

- deploy-wheels:

- docker:

- - image: circleci/python:3.8

- steps:

- - setup_remote_docker

- - attach_workspace:

- at: /tmp/workspace

- - run:

- name: Install dependencies

- command: |

- sudo apt-get update

- sudo apt-get install -y build-essential cmake

- pip install setuptools wheel twine

- - run:

- name: Upload Python package

- command: |

- twine upload /tmp/workspace/*.whl --username __token__ --password $PYPI_CRED

- - store_artifacts:

- path: /tmp/workspace

-

- build-bindings-backend-linux:

- machine:

- image: ubuntu-2204:current

- steps:

- - checkout

- - run:

- name: Update Submodules

- command: |

- git submodule sync

- git submodule update --init --recursive

- - restore_cache:

- keys:

- - ccache-gpt4all-linux-amd64-

- - run:

- <<: *job-linux-install-backend-deps

- - run:

- name: Build Libraries

- no_output_timeout: 30m

- command: |

- export PATH=$PATH:/usr/local/cuda/bin

- ccache -o "cache_dir=${PWD}/../.ccache" -o max_size=500M -p -z

- cd gpt4all-backend

- mkdir -p runtimes/build

- cd runtimes/build

- cmake ../.. -G Ninja \

- -DCMAKE_BUILD_TYPE=Release \

- -DCMAKE_C_COMPILER=clang-19 \

- -DCMAKE_CXX_COMPILER=clang++-19 \

- -DCMAKE_CXX_COMPILER_AR=ar \

- -DCMAKE_CXX_COMPILER_RANLIB=ranlib \

- -DCMAKE_BUILD_TYPE=Release \

- -DCMAKE_C_COMPILER_LAUNCHER=ccache \

- -DCMAKE_CXX_COMPILER_LAUNCHER=ccache \

- -DCMAKE_CUDA_COMPILER_LAUNCHER=ccache \

- -DKOMPUTE_OPT_DISABLE_VULKAN_VERSION_CHECK=ON

- cmake --build . -j$(nproc)

- ccache -s

- mkdir ../linux-x64

- cp -L *.so ../linux-x64 # otherwise persist_to_workspace seems to mess symlinks

- - save_cache:

- key: ccache-gpt4all-linux-amd64-{{ epoch }}

- when: always

- paths:

- - ../.ccache

- - persist_to_workspace:

- root: gpt4all-backend

- paths:

- - runtimes/linux-x64/*.so

-

- build-bindings-backend-macos:

- <<: *job-macos-executor

- steps:

- - checkout

- - run:

- name: Update Submodules

- command: |

- git submodule sync

- git submodule update --init --recursive

- - restore_cache:

- keys:

- - ccache-gpt4all-macos-

- - run:

- <<: *job-macos-install-deps

- - run:

- name: Build Libraries

- no_output_timeout: 30m

- command: |

- ccache -o "cache_dir=${PWD}/../.ccache" -o max_size=500M -p -z

- cd gpt4all-backend

- mkdir -p runtimes/build

- cd runtimes/build

- cmake ../.. \

- -DCMAKE_BUILD_TYPE=Release \

- -DCMAKE_C_COMPILER=/opt/homebrew/opt/llvm/bin/clang \

- -DCMAKE_CXX_COMPILER=/opt/homebrew/opt/llvm/bin/clang++ \

- -DCMAKE_RANLIB=/usr/bin/ranlib \

- -DCMAKE_C_COMPILER_LAUNCHER=ccache \

- -DCMAKE_CXX_COMPILER_LAUNCHER=ccache \

- -DBUILD_UNIVERSAL=ON \

- -DCMAKE_OSX_DEPLOYMENT_TARGET=12.6 \

- -DGGML_METAL_MACOSX_VERSION_MIN=12.6

- cmake --build . --parallel

- ccache -s

- mkdir ../osx-x64

- cp -L *.dylib ../osx-x64

- cp ../../llama.cpp-mainline/*.metal ../osx-x64

- ls ../osx-x64

- - save_cache:

- key: ccache-gpt4all-macos-{{ epoch }}

- when: always

- paths:

- - ../.ccache

- - persist_to_workspace:

- root: gpt4all-backend

- paths:

- - runtimes/osx-x64/*.dylib

- - runtimes/osx-x64/*.metal

-

- build-bindings-backend-windows:

- machine:

- image: windows-server-2022-gui:2024.04.1

- resource_class: windows.large

- shell: powershell.exe -ExecutionPolicy Bypass

- steps:

- - checkout

- - run:

- name: Update Submodules

- command: |

- git submodule sync

- git submodule update --init --recursive

- - restore_cache:

- keys:

- - ccache-gpt4all-win-amd64-

- - run:

- name: Install dependencies

- command: |

- choco install -y ccache cmake ninja wget --installargs 'ADD_CMAKE_TO_PATH=System'

- - run:

- name: Install VulkanSDK

- command: |

- wget.exe "https://sdk.lunarg.com/sdk/download/1.3.261.1/windows/VulkanSDK-1.3.261.1-Installer.exe"

- .\VulkanSDK-1.3.261.1-Installer.exe --accept-licenses --default-answer --confirm-command install

- - run:

- name: Install CUDA Toolkit

- command: |

- wget.exe "https://developer.download.nvidia.com/compute/cuda/11.8.0/network_installers/cuda_11.8.0_windows_network.exe"

- .\cuda_11.8.0_windows_network.exe -s cudart_11.8 nvcc_11.8 cublas_11.8 cublas_dev_11.8

- - run:

- name: Build Libraries

- no_output_timeout: 30m

- command: |

- $vsInstallPath = & "C:\Program Files (x86)\Microsoft Visual Studio\Installer\vswhere.exe" -property installationpath

- Import-Module "${vsInstallPath}\Common7\Tools\Microsoft.VisualStudio.DevShell.dll"

- Enter-VsDevShell -VsInstallPath "$vsInstallPath" -SkipAutomaticLocation -DevCmdArguments '-arch=x64 -no_logo'

-

- $Env:Path += ";C:\VulkanSDK\1.3.261.1\bin"

- $Env:VULKAN_SDK = "C:\VulkanSDK\1.3.261.1"

- ccache -o "cache_dir=${pwd}\..\.ccache" -o max_size=500M -p -z

- cd gpt4all-backend

- mkdir runtimes/win-x64_msvc

- cd runtimes/win-x64_msvc

- cmake -S ../.. -B . -G Ninja `

- -DCMAKE_BUILD_TYPE=Release `

- -DCMAKE_C_COMPILER_LAUNCHER=ccache `

- -DCMAKE_CXX_COMPILER_LAUNCHER=ccache `

- -DCMAKE_CUDA_COMPILER_LAUNCHER=ccache `

- -DKOMPUTE_OPT_DISABLE_VULKAN_VERSION_CHECK=ON

- cmake --build . --parallel

- ccache -s

- cp bin/Release/*.dll .

- - save_cache:

- key: ccache-gpt4all-win-amd64-{{ epoch }}

- when: always

- paths:

- - ..\.ccache

- - persist_to_workspace:

- root: gpt4all-backend

- paths:

- - runtimes/win-x64_msvc/*.dll

-

- build-nodejs-linux:

- docker:

- - image: cimg/base:stable

- steps:

- - checkout

- - attach_workspace:

- at: /tmp/gpt4all-backend

- - node/install:

- install-yarn: true

- node-version: "18.16"

- - run: node --version

- - run: corepack enable

- - node/install-packages:

- app-dir: gpt4all-bindings/typescript

- pkg-manager: yarn

- override-ci-command: yarn install

- - run:

- command: |

- cd gpt4all-bindings/typescript

- yarn prebuildify -t 18.16.0 --napi

- - run:

- command: |

- mkdir -p gpt4all-backend/prebuilds/linux-x64

- mkdir -p gpt4all-backend/runtimes/linux-x64

- cp /tmp/gpt4all-backend/runtimes/linux-x64/*-*.so gpt4all-backend/runtimes/linux-x64

- cp gpt4all-bindings/typescript/prebuilds/linux-x64/*.node gpt4all-backend/prebuilds/linux-x64

- - persist_to_workspace:

- root: gpt4all-backend

- paths:

- - prebuilds/linux-x64/*.node

- - runtimes/linux-x64/*-*.so

-

- build-nodejs-macos:

- <<: *job-macos-executor

- steps:

- - checkout

- - attach_workspace:

- at: /tmp/gpt4all-backend

- - node/install:

- install-yarn: true

- node-version: "18.16"

- - run: node --version

- - run: corepack enable

- - node/install-packages:

- app-dir: gpt4all-bindings/typescript

- pkg-manager: yarn

- override-ci-command: yarn install

- - run:

- command: |

- cd gpt4all-bindings/typescript

- yarn prebuildify -t 18.16.0 --napi

- - run:

- name: "Persisting all necessary things to workspace"

- command: |

- mkdir -p gpt4all-backend/prebuilds/darwin-x64

- mkdir -p gpt4all-backend/runtimes/darwin

- cp /tmp/gpt4all-backend/runtimes/osx-x64/*-*.* gpt4all-backend/runtimes/darwin

- cp gpt4all-bindings/typescript/prebuilds/darwin-x64/*.node gpt4all-backend/prebuilds/darwin-x64

- - persist_to_workspace:

- root: gpt4all-backend

- paths:

- - prebuilds/darwin-x64/*.node

- - runtimes/darwin/*-*.*

-

- build-nodejs-windows:

- executor:

- name: win/default

- size: large

- shell: powershell.exe -ExecutionPolicy Bypass

- steps:

- - checkout

- - attach_workspace:

- at: /tmp/gpt4all-backend

- - run: choco install wget -y

- - run:

- command: |

- wget.exe "https://nodejs.org/dist/v18.16.0/node-v18.16.0-x86.msi" -P C:\Users\circleci\Downloads\

- MsiExec.exe /i C:\Users\circleci\Downloads\node-v18.16.0-x86.msi /qn

- - run:

- command: |

- Start-Process powershell -verb runAs -Args "-start GeneralProfile"

- nvm install 18.16.0

- nvm use 18.16.0

- - run: node --version

- - run: corepack enable

- - run:

- command: |

- npm install -g yarn

- cd gpt4all-bindings/typescript

- yarn install

- - run:

- command: |

- cd gpt4all-bindings/typescript

- yarn prebuildify -t 18.16.0 --napi

- - run:

- command: |

- mkdir -p gpt4all-backend/prebuilds/win32-x64

- mkdir -p gpt4all-backend/runtimes/win32-x64

- cp /tmp/gpt4all-backend/runtimes/win-x64_msvc/*-*.dll gpt4all-backend/runtimes/win32-x64

- cp gpt4all-bindings/typescript/prebuilds/win32-x64/*.node gpt4all-backend/prebuilds/win32-x64

-

- - persist_to_workspace:

- root: gpt4all-backend

- paths:

- - prebuilds/win32-x64/*.node

- - runtimes/win32-x64/*-*.dll

-

- deploy-npm-pkg:

- docker:

- - image: cimg/base:stable

- steps:

- - attach_workspace:

- at: /tmp/gpt4all-backend

- - checkout

- - node/install:

- install-yarn: true

- node-version: "18.16"

- - run: node --version

- - run: corepack enable

- - run:

- command: |

- cd gpt4all-bindings/typescript

- # excluding llmodel. nodejs bindings dont need llmodel.dll

- mkdir -p runtimes/win32-x64/native

- mkdir -p prebuilds/win32-x64/

- cp /tmp/gpt4all-backend/runtimes/win-x64_msvc/*-*.dll runtimes/win32-x64/native/

- cp /tmp/gpt4all-backend/prebuilds/win32-x64/*.node prebuilds/win32-x64/

-

- mkdir -p runtimes/linux-x64/native

- mkdir -p prebuilds/linux-x64/

- cp /tmp/gpt4all-backend/runtimes/linux-x64/*-*.so runtimes/linux-x64/native/

- cp /tmp/gpt4all-backend/prebuilds/linux-x64/*.node prebuilds/linux-x64/

-

- # darwin has univeral runtime libraries

- mkdir -p runtimes/darwin/native

- mkdir -p prebuilds/darwin-x64/

-

- cp /tmp/gpt4all-backend/runtimes/darwin/*-*.* runtimes/darwin/native/

-

- cp /tmp/gpt4all-backend/prebuilds/darwin-x64/*.node prebuilds/darwin-x64/

-

- # Fallback build if user is not on above prebuilds

- mv -f binding.ci.gyp binding.gyp

-

- mkdir gpt4all-backend

- cd ../../gpt4all-backend

- mv llmodel.h llmodel.cpp llmodel_c.cpp llmodel_c.h sysinfo.h dlhandle.h ../gpt4all-bindings/typescript/gpt4all-backend/

-

- # Test install

- - node/install-packages:

- app-dir: gpt4all-bindings/typescript

- pkg-manager: yarn

- override-ci-command: yarn install

- - run:

- command: |

- cd gpt4all-bindings/typescript

- yarn run test

- - run:

- command: |

- cd gpt4all-bindings/typescript

- npm set //registry.npmjs.org/:_authToken=$NPM_TOKEN

- npm publish

-

# only run a job on the main branch

job_only_main: &job_only_main

filters:

@@ -1849,8 +1309,6 @@ workflows:

not:

or:

- << pipeline.parameters.run-all-workflows >>

- - << pipeline.parameters.run-python-workflow >>

- - << pipeline.parameters.run-ts-workflow >>

- << pipeline.parameters.run-chat-workflow >>

- equal: [ << pipeline.trigger_source >>, scheduled_pipeline ]

jobs:

@@ -2079,87 +1537,9 @@ workflows:

when:

and:

- equal: [ << pipeline.git.branch >>, main ]

- - or:

- - << pipeline.parameters.run-all-workflows >>

- - << pipeline.parameters.run-python-workflow >>

+ - << pipeline.parameters.run-all-workflows >>

- not:

equal: [ << pipeline.trigger_source >>, scheduled_pipeline ]

jobs:

- deploy-docs:

context: gpt4all

- build-python:

- when:

- and:

- - or: [ << pipeline.parameters.run-all-workflows >>, << pipeline.parameters.run-python-workflow >> ]

- - not:

- equal: [ << pipeline.trigger_source >>, scheduled_pipeline ]

- jobs:

- - pypi-hold:

- <<: *job_only_main

- type: approval

- - hold:

- type: approval

- - build-py-linux:

- requires:

- - hold

- - build-py-macos:

- requires:

- - hold

- - build-py-windows:

- requires:

- - hold

- - deploy-wheels:

- <<: *job_only_main

- context: gpt4all

- requires:

- - pypi-hold

- - build-py-windows

- - build-py-linux

- - build-py-macos

- build-bindings:

- when:

- and:

- - or: [ << pipeline.parameters.run-all-workflows >>, << pipeline.parameters.run-ts-workflow >> ]

- - not:

- equal: [ << pipeline.trigger_source >>, scheduled_pipeline ]

- jobs:

- - backend-hold:

- type: approval

- - nodejs-hold:

- type: approval

- - npm-hold:

- <<: *job_only_main

- type: approval

- - docs-hold:

- type: approval

- - build-bindings-backend-linux:

- requires:

- - backend-hold

- - build-bindings-backend-macos:

- requires:

- - backend-hold

- - build-bindings-backend-windows:

- requires:

- - backend-hold

- - build-nodejs-linux:

- requires:

- - nodejs-hold

- - build-bindings-backend-linux

- - build-nodejs-windows:

- requires:

- - nodejs-hold

- - build-bindings-backend-windows

- - build-nodejs-macos:

- requires:

- - nodejs-hold

- - build-bindings-backend-macos

- - build-ts-docs:

- requires:

- - docs-hold

- - deploy-npm-pkg:

- <<: *job_only_main

- requires:

- - npm-hold

- - build-nodejs-linux

- - build-nodejs-windows

- - build-nodejs-macos

diff --git a/.codespellrc b/.codespellrc

index 0f401f6bed3b..9a625e32a452 100644

--- a/.codespellrc

+++ b/.codespellrc

@@ -1,3 +1,3 @@

[codespell]

-ignore-words-list = blong, afterall, assistent, crasher, requestor

+ignore-words-list = blong, afterall, assistent, crasher, requestor, nam

skip = ./.git,./gpt4all-chat/translations,*.pdf,*.svg,*.lock

diff --git a/.github/ISSUE_TEMPLATE/bindings-bug.md b/.github/ISSUE_TEMPLATE/bindings-bug.md

deleted file mode 100644

index cbf0d49dd51b..000000000000

--- a/.github/ISSUE_TEMPLATE/bindings-bug.md

+++ /dev/null

@@ -1,35 +0,0 @@

----

-name: "\U0001F6E0 Bindings Bug Report"

-about: A bug report for the GPT4All Bindings

-labels: ["bindings", "bug-unconfirmed"]

----

-

-

-

-### Bug Report

-

-

-

-### Example Code

-

-

-

-### Steps to Reproduce

-

-

-

-1.

-2.

-3.

-

-### Expected Behavior

-

-

-

-### Your Environment

-

-- Bindings version (e.g. "Version" from `pip show gpt4all`):

-- Operating System:

-- Chat model used (if applicable):

-

-

diff --git a/.gitmodules b/.gitmodules

index 82388e151a69..6afea7f0f6c5 100644

--- a/.gitmodules

+++ b/.gitmodules

@@ -1,16 +1,16 @@

-[submodule "llama.cpp-mainline"]

- path = gpt4all-backend/deps/llama.cpp-mainline

+[submodule "gpt4all-backend-old/deps/llama.cpp-mainline"]

+ path = gpt4all-backend-old/deps/llama.cpp-mainline

url = https://github.com/nomic-ai/llama.cpp.git

- branch = master

+ branch = master

[submodule "gpt4all-chat/usearch"]

path = gpt4all-chat/deps/usearch

url = https://github.com/nomic-ai/usearch.git

[submodule "gpt4all-chat/deps/SingleApplication"]

path = gpt4all-chat/deps/SingleApplication

url = https://github.com/nomic-ai/SingleApplication.git

-[submodule "gpt4all-chat/deps/fmt"]

- path = gpt4all-chat/deps/fmt

- url = https://github.com/fmtlib/fmt.git

+[submodule "deps/fmt"]

+ path = deps/fmt

+ url = https://github.com/nomic-ai/fmt.git

[submodule "gpt4all-chat/deps/DuckX"]

path = gpt4all-chat/deps/DuckX

url = https://github.com/nomic-ai/DuckX.git

@@ -23,3 +23,12 @@

[submodule "gpt4all-chat/deps/json"]

path = gpt4all-chat/deps/json

url = https://github.com/nlohmann/json.git

+[submodule "gpt4all-backend/deps/qcoro"]

+ path = deps/qcoro

+ url = https://github.com/nomic-ai/qcoro.git

+[submodule "gpt4all-backend/deps/date"]

+ path = gpt4all-backend/deps/date

+ url = https://github.com/HowardHinnant/date.git

+[submodule "gpt4all-chat/deps/generator"]

+ path = gpt4all-chat/deps/generator

+ url = https://github.com/TartanLlama/generator.git

diff --git a/MAINTAINERS.md b/MAINTAINERS.md

index 6838a0b82583..6907aa1e79e0 100644

--- a/MAINTAINERS.md

+++ b/MAINTAINERS.md

@@ -29,13 +29,6 @@ Jared Van Bortel ([@cebtenzzre](https://github.com/cebtenzzre))

- —

@@ -42,12 +42,12 @@ GPT4All is made possible by our compute partner

- —

—

- —

@@ -74,24 +74,6 @@ See the full [System Requirements](gpt4all-chat/system_requirements.md) for more

-## Install GPT4All Python

-

-`gpt4all` gives you access to LLMs with our Python client around [`llama.cpp`](https://github.com/ggerganov/llama.cpp) implementations.

-

-Nomic contributes to open source software like [`llama.cpp`](https://github.com/ggerganov/llama.cpp) to make LLMs accessible and efficient **for all**.

-

-```bash

-pip install gpt4all

-```

-

-```python

-from gpt4all import GPT4All

-model = GPT4All("Meta-Llama-3-8B-Instruct.Q4_0.gguf") # downloads / loads a 4.66GB LLM

-with model.chat_session():

- print(model.generate("How can I run LLMs efficiently on my laptop?", max_tokens=1024))

-```

-

-

## Integrations

:parrot::link: [Langchain](https://python.langchain.com/v0.2/docs/integrations/providers/gpt4all/)

@@ -119,7 +101,7 @@ Please see CONTRIBUTING.md and follow the issues, bug reports, and PR markdown t

Check project discord, with project owners, or through existing issues/PRs to avoid duplicate work.

Please make sure to tag all of the above with relevant project identifiers or your contribution could potentially get lost.

-Example tags: `backend`, `bindings`, `python-bindings`, `documentation`, etc.

+Example tags: `backend`, `documentation`, etc.

## Citation

diff --git a/common/common.cmake b/common/common.cmake

index b8b6e969a357..d307d8861ae9 100644

--- a/common/common.cmake

+++ b/common/common.cmake

@@ -1,3 +1,8 @@

+# enable color diagnostics with ninja

+if (CMAKE_GENERATOR STREQUAL Ninja AND CMAKE_CXX_COMPILER_ID MATCHES "GNU|Clang")

+ add_compile_options(-fdiagnostics-color=always)

+endif()

+

function(gpt4all_add_warning_options target)

if (MSVC)

return()

diff --git a/deps/CMakeLists.txt b/deps/CMakeLists.txt

new file mode 100644

index 000000000000..6888ac289ba8

--- /dev/null

+++ b/deps/CMakeLists.txt

@@ -0,0 +1,22 @@

+set(BUILD_SHARED_LIBS OFF)

+

+set(FMT_INSTALL OFF)

+add_subdirectory(fmt)

+

+set(BUILD_TESTING OFF)

+set(QCORO_BUILD_EXAMPLES OFF)

+set(QCORO_WITH_QTDBUS OFF)

+set(QCORO_WITH_QTWEBSOCKETS OFF)

+set(QCORO_WITH_QTQUICK OFF)

+set(QCORO_WITH_QTTEST OFF)

+add_subdirectory(qcoro)

+

+set(GPT4ALL_BOOST_TAG 1.87.0)

+FetchContent_Declare(

+ boost

+ URL "https://github.com/boostorg/boost/releases/download/boost-${GPT4ALL_BOOST_TAG}/boost-${GPT4ALL_BOOST_TAG}-cmake.tar.xz"

+ URL_HASH "SHA256=7da75f171837577a52bbf217e17f8ea576c7c246e4594d617bfde7fafd408be5"

+)

+

+set(BOOST_INCLUDE_LIBRARIES json describe system)

+FetchContent_MakeAvailable(boost)

diff --git a/deps/fmt b/deps/fmt

new file mode 160000

index 000000000000..1d7302c89a08

--- /dev/null

+++ b/deps/fmt

@@ -0,0 +1 @@

+Subproject commit 1d7302c89a08a7ff0d656ae887b573c3430e7a90

diff --git a/deps/qcoro b/deps/qcoro

new file mode 160000

index 000000000000..4405cc1ee8d9

--- /dev/null

+++ b/deps/qcoro

@@ -0,0 +1 @@

+Subproject commit 4405cc1ee8d9fae6dcd0dd4aed26429ea9b3fc21

diff --git a/gpt4all-bindings/python/docs/assets/add.png b/docs/assets/add.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/add.png

rename to docs/assets/add.png

diff --git a/gpt4all-bindings/python/docs/assets/add_model_gpt4.png b/docs/assets/add_model_gpt4.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/add_model_gpt4.png

rename to docs/assets/add_model_gpt4.png

diff --git a/gpt4all-bindings/python/docs/assets/attach_spreadsheet.png b/docs/assets/attach_spreadsheet.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/attach_spreadsheet.png

rename to docs/assets/attach_spreadsheet.png

diff --git a/gpt4all-bindings/python/docs/assets/baelor.png b/docs/assets/baelor.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/baelor.png

rename to docs/assets/baelor.png

diff --git a/gpt4all-bindings/python/docs/assets/before_first_chat.png b/docs/assets/before_first_chat.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/before_first_chat.png

rename to docs/assets/before_first_chat.png

diff --git a/gpt4all-bindings/python/docs/assets/chat_window.png b/docs/assets/chat_window.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/chat_window.png

rename to docs/assets/chat_window.png

diff --git a/gpt4all-bindings/python/docs/assets/closed_chat_panel.png b/docs/assets/closed_chat_panel.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/closed_chat_panel.png

rename to docs/assets/closed_chat_panel.png

diff --git a/gpt4all-bindings/python/docs/assets/configure_doc_collection.png b/docs/assets/configure_doc_collection.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/configure_doc_collection.png

rename to docs/assets/configure_doc_collection.png

diff --git a/gpt4all-bindings/python/docs/assets/disney_spreadsheet.png b/docs/assets/disney_spreadsheet.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/disney_spreadsheet.png

rename to docs/assets/disney_spreadsheet.png

diff --git a/gpt4all-bindings/python/docs/assets/download.png b/docs/assets/download.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/download.png

rename to docs/assets/download.png

diff --git a/gpt4all-bindings/python/docs/assets/download_llama.png b/docs/assets/download_llama.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/download_llama.png

rename to docs/assets/download_llama.png

diff --git a/gpt4all-bindings/python/docs/assets/explore.png b/docs/assets/explore.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/explore.png

rename to docs/assets/explore.png

diff --git a/gpt4all-bindings/python/docs/assets/explore_models.png b/docs/assets/explore_models.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/explore_models.png

rename to docs/assets/explore_models.png

diff --git a/gpt4all-bindings/python/docs/assets/favicon.ico b/docs/assets/favicon.ico

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/favicon.ico

rename to docs/assets/favicon.ico

diff --git a/gpt4all-bindings/python/docs/assets/good_tyrion.png b/docs/assets/good_tyrion.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/good_tyrion.png

rename to docs/assets/good_tyrion.png

diff --git a/gpt4all-bindings/python/docs/assets/got_docs_ready.png b/docs/assets/got_docs_ready.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/got_docs_ready.png

rename to docs/assets/got_docs_ready.png

diff --git a/gpt4all-bindings/python/docs/assets/got_done.png b/docs/assets/got_done.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/got_done.png

rename to docs/assets/got_done.png

diff --git a/gpt4all-bindings/python/docs/assets/gpt4all_home.png b/docs/assets/gpt4all_home.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/gpt4all_home.png

rename to docs/assets/gpt4all_home.png

diff --git a/gpt4all-bindings/python/docs/assets/gpt4all_xlsx_attachment.mp4 b/docs/assets/gpt4all_xlsx_attachment.mp4

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/gpt4all_xlsx_attachment.mp4

rename to docs/assets/gpt4all_xlsx_attachment.mp4

diff --git a/gpt4all-bindings/python/docs/assets/installed_models.png b/docs/assets/installed_models.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/installed_models.png

rename to docs/assets/installed_models.png

diff --git a/gpt4all-bindings/python/docs/assets/linux.png b/docs/assets/linux.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/linux.png

rename to docs/assets/linux.png

diff --git a/gpt4all-bindings/python/docs/assets/local_embed.gif b/docs/assets/local_embed.gif

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/local_embed.gif

rename to docs/assets/local_embed.gif

diff --git a/gpt4all-bindings/python/docs/assets/mac.png b/docs/assets/mac.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/mac.png

rename to docs/assets/mac.png

diff --git a/gpt4all-bindings/python/docs/assets/models_page_icon.png b/docs/assets/models_page_icon.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/models_page_icon.png

rename to docs/assets/models_page_icon.png

diff --git a/gpt4all-bindings/python/docs/assets/new_docs_annotated.png b/docs/assets/new_docs_annotated.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/new_docs_annotated.png

rename to docs/assets/new_docs_annotated.png

diff --git a/gpt4all-bindings/python/docs/assets/new_docs_annotated_filled.png b/docs/assets/new_docs_annotated_filled.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/new_docs_annotated_filled.png

rename to docs/assets/new_docs_annotated_filled.png

diff --git a/gpt4all-bindings/python/docs/assets/new_first_chat.png b/docs/assets/new_first_chat.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/new_first_chat.png

rename to docs/assets/new_first_chat.png

diff --git a/gpt4all-bindings/python/docs/assets/no_docs.png b/docs/assets/no_docs.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/no_docs.png

rename to docs/assets/no_docs.png

diff --git a/gpt4all-bindings/python/docs/assets/no_models.png b/docs/assets/no_models.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/no_models.png

rename to docs/assets/no_models.png

diff --git a/gpt4all-bindings/python/docs/assets/no_models_tiny.png b/docs/assets/no_models_tiny.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/no_models_tiny.png

rename to docs/assets/no_models_tiny.png

diff --git a/gpt4all-bindings/python/docs/assets/nomic.png b/docs/assets/nomic.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/nomic.png

rename to docs/assets/nomic.png

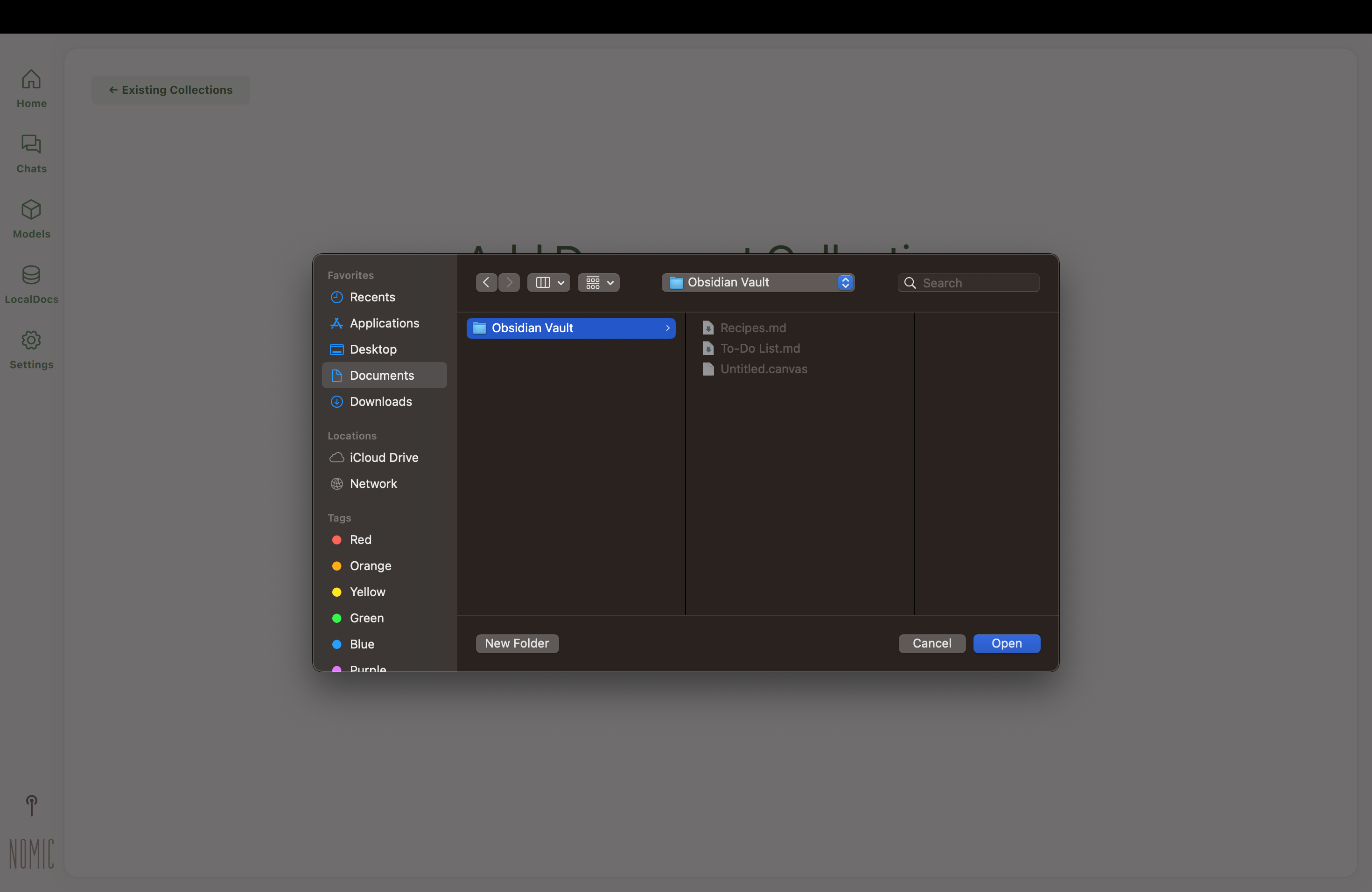

diff --git a/gpt4all-bindings/python/docs/assets/obsidian_adding_collection.png b/docs/assets/obsidian_adding_collection.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/obsidian_adding_collection.png

rename to docs/assets/obsidian_adding_collection.png

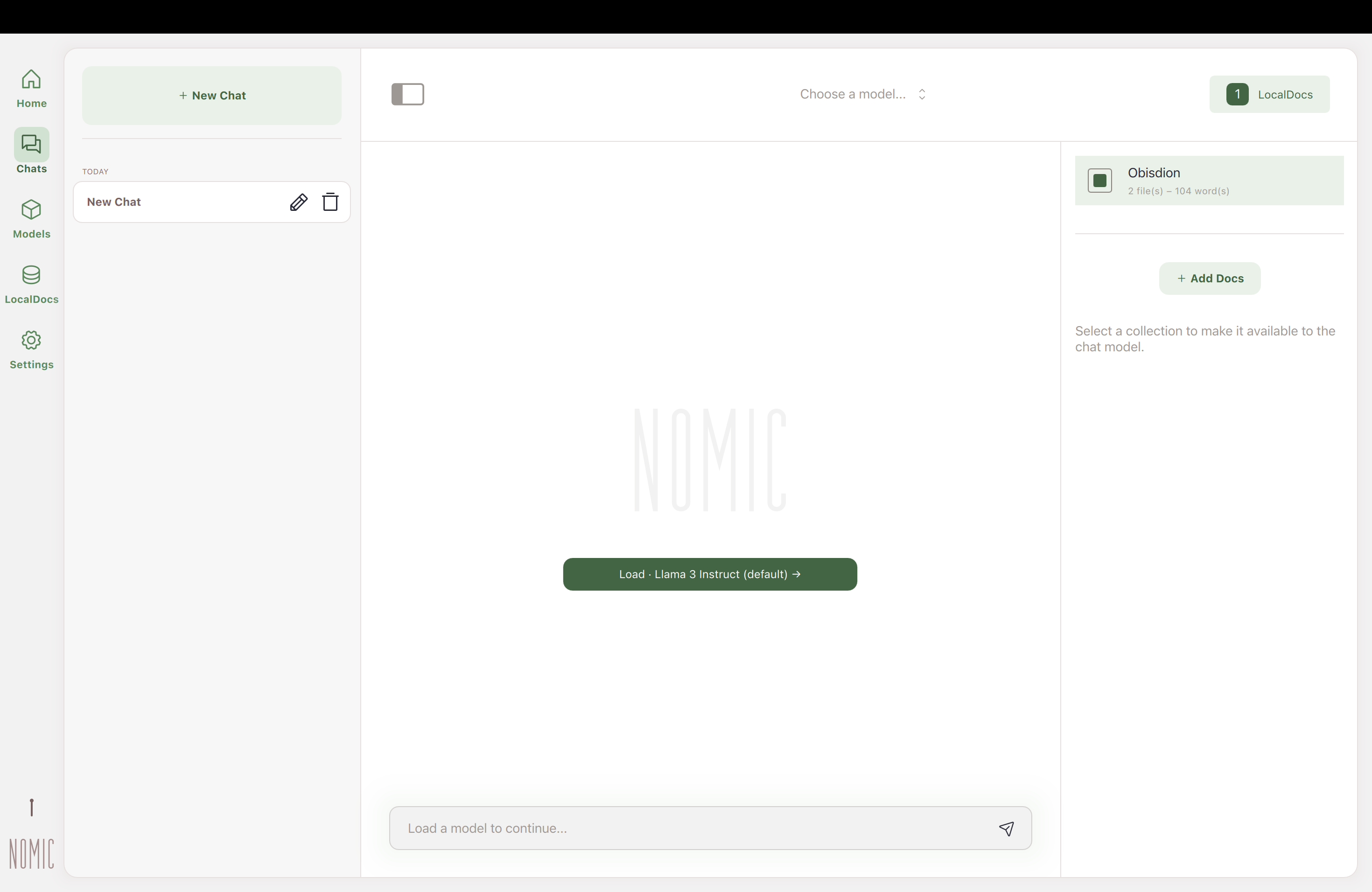

diff --git a/gpt4all-bindings/python/docs/assets/obsidian_docs.png b/docs/assets/obsidian_docs.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/obsidian_docs.png

rename to docs/assets/obsidian_docs.png

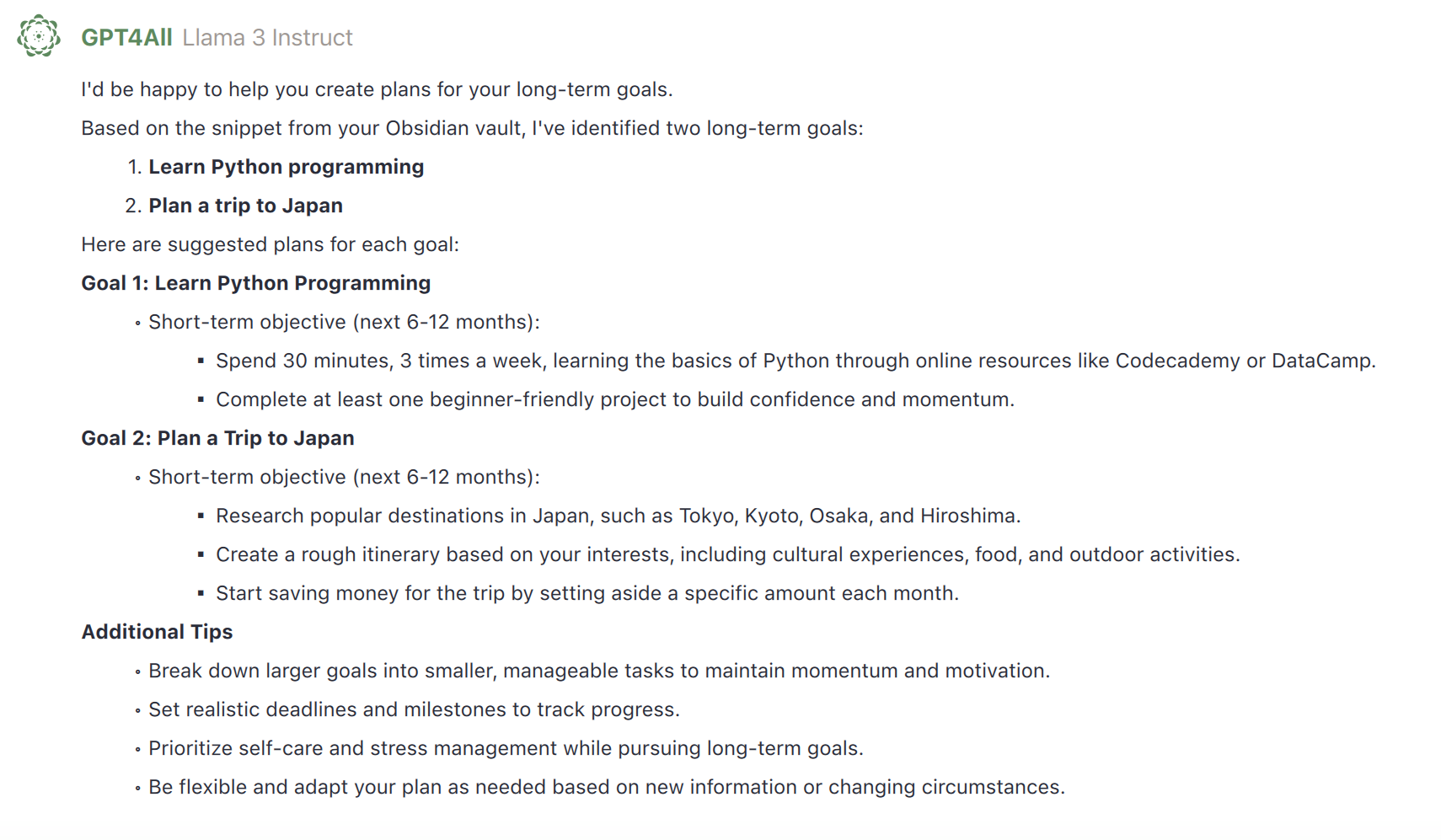

diff --git a/gpt4all-bindings/python/docs/assets/obsidian_response.png b/docs/assets/obsidian_response.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/obsidian_response.png

rename to docs/assets/obsidian_response.png

diff --git a/gpt4all-bindings/python/docs/assets/obsidian_sources.png b/docs/assets/obsidian_sources.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/obsidian_sources.png

rename to docs/assets/obsidian_sources.png

diff --git a/gpt4all-bindings/python/docs/assets/open_chat_panel.png b/docs/assets/open_chat_panel.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/open_chat_panel.png

rename to docs/assets/open_chat_panel.png

diff --git a/gpt4all-bindings/python/docs/assets/open_local_docs.png b/docs/assets/open_local_docs.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/open_local_docs.png

rename to docs/assets/open_local_docs.png

diff --git a/gpt4all-bindings/python/docs/assets/open_sources.png b/docs/assets/open_sources.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/open_sources.png

rename to docs/assets/open_sources.png

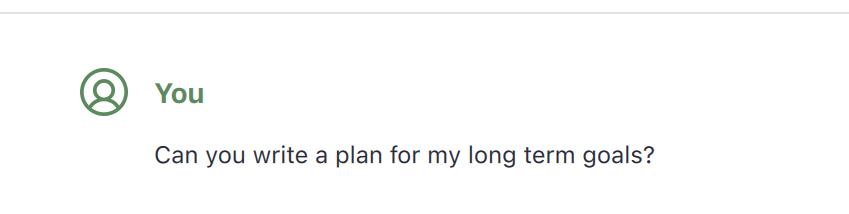

diff --git a/gpt4all-bindings/python/docs/assets/osbsidian_user_interaction.png b/docs/assets/osbsidian_user_interaction.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/osbsidian_user_interaction.png

rename to docs/assets/osbsidian_user_interaction.png

diff --git a/gpt4all-bindings/python/docs/assets/search_mistral.png b/docs/assets/search_mistral.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/search_mistral.png

rename to docs/assets/search_mistral.png

diff --git a/gpt4all-bindings/python/docs/assets/search_settings.png b/docs/assets/search_settings.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/search_settings.png

rename to docs/assets/search_settings.png

diff --git a/gpt4all-bindings/python/docs/assets/spreadsheet_chat.png b/docs/assets/spreadsheet_chat.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/spreadsheet_chat.png

rename to docs/assets/spreadsheet_chat.png

diff --git a/gpt4all-bindings/python/docs/assets/syrio_snippets.png b/docs/assets/syrio_snippets.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/syrio_snippets.png

rename to docs/assets/syrio_snippets.png

diff --git a/gpt4all-bindings/python/docs/assets/three_model_options.png b/docs/assets/three_model_options.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/three_model_options.png

rename to docs/assets/three_model_options.png

diff --git a/gpt4all-bindings/python/docs/assets/ubuntu.svg b/docs/assets/ubuntu.svg

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/ubuntu.svg

rename to docs/assets/ubuntu.svg

diff --git a/gpt4all-bindings/python/docs/assets/windows.png b/docs/assets/windows.png

similarity index 100%

rename from gpt4all-bindings/python/docs/assets/windows.png

rename to docs/assets/windows.png

diff --git a/gpt4all-bindings/python/docs/css/custom.css b/docs/css/custom.css

similarity index 100%

rename from gpt4all-bindings/python/docs/css/custom.css

rename to docs/css/custom.css

diff --git a/gpt4all-bindings/python/docs/gpt4all_api_server/home.md b/docs/gpt4all_api_server/home.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_api_server/home.md

rename to docs/gpt4all_api_server/home.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/chat_templates.md b/docs/gpt4all_desktop/chat_templates.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/chat_templates.md

rename to docs/gpt4all_desktop/chat_templates.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/chats.md b/docs/gpt4all_desktop/chats.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/chats.md

rename to docs/gpt4all_desktop/chats.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-Obsidian.md b/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-Obsidian.md

similarity index 82%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-Obsidian.md

rename to docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-Obsidian.md

index 2660c38d8e64..1bf128d0627c 100644

--- a/gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-Obsidian.md

+++ b/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-Obsidian.md

@@ -46,7 +46,7 @@ Obsidian for Desktop is a powerful management and note-taking software designed

-

@@ -65,7 +65,7 @@ Obsidian for Desktop is a powerful management and note-taking software designed

-

@@ -76,7 +76,7 @@ Obsidian for Desktop is a powerful management and note-taking software designed

-

@@ -84,7 +84,7 @@ Obsidian for Desktop is a powerful management and note-taking software designed

-

@@ -96,7 +96,7 @@ Obsidian for Desktop is a powerful management and note-taking software designed

-

@@ -104,6 +104,3 @@ Obsidian for Desktop is a powerful management and note-taking software designed

## How It Works

Obsidian for Desktop syncs your Obsidian notes to your computer, while LocalDocs integrates these files into your LLM chats using embedding models. These models find semantically similar snippets from your files to enhance the context of your interactions.

-

-To learn more about embedding models and explore further, refer to the [Nomic Python SDK documentation](https://docs.nomic.ai/atlas/capabilities/embeddings).

-

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-One-Drive.md b/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-One-Drive.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-One-Drive.md

rename to docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-One-Drive.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-google-drive.md b/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-google-drive.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-google-drive.md

rename to docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-google-drive.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-microsoft-excel.md b/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-microsoft-excel.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-microsoft-excel.md

rename to docs/gpt4all_desktop/cookbook/use-local-ai-models-to-privately-chat-with-microsoft-excel.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/localdocs.md b/docs/gpt4all_desktop/localdocs.md

similarity index 93%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/localdocs.md

rename to docs/gpt4all_desktop/localdocs.md

index c3290a92e6b5..906279ade52d 100644

--- a/gpt4all-bindings/python/docs/gpt4all_desktop/localdocs.md

+++ b/docs/gpt4all_desktop/localdocs.md

@@ -44,5 +44,3 @@ LocalDocs brings the information you have from files on-device into your LLM cha

## How It Works

A LocalDocs collection uses Nomic AI's free and fast on-device embedding models to index your folder into text snippets that each get an **embedding vector**. These vectors allow us to find snippets from your files that are semantically similar to the questions and prompts you enter in your chats. We then include those semantically similar snippets in the prompt to the LLM.

-

-To try the embedding models yourself, we recommend using the [Nomic Python SDK](https://docs.nomic.ai/atlas/capabilities/embeddings)

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/models.md b/docs/gpt4all_desktop/models.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/models.md

rename to docs/gpt4all_desktop/models.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/quickstart.md b/docs/gpt4all_desktop/quickstart.md

similarity index 100%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/quickstart.md

rename to docs/gpt4all_desktop/quickstart.md

diff --git a/gpt4all-bindings/python/docs/gpt4all_desktop/settings.md b/docs/gpt4all_desktop/settings.md

similarity index 98%

rename from gpt4all-bindings/python/docs/gpt4all_desktop/settings.md

rename to docs/gpt4all_desktop/settings.md

index e9d5eb8531ec..d48fe06975ec 100644

--- a/gpt4all-bindings/python/docs/gpt4all_desktop/settings.md

+++ b/docs/gpt4all_desktop/settings.md

@@ -49,7 +49,6 @@ You can **clone** an existing model, which allows you to save a configuration of

|----------------------------|------------------------------------------|-----------|

| **Context Length** | Maximum length of input sequence in tokens | 2048 |

| **Max Length** | Maximum length of response in tokens | 4096 |

- | **Prompt Batch Size** | Token batch size for parallel processing | 128 |

| **Temperature** | Lower temperature gives more likely generations | 0.7 |

| **Top P** | Prevents choosing highly unlikely tokens | 0.4 |

| **Top K** | Size of selection pool for tokens | 40 |

diff --git a/gpt4all-bindings/python/docs/gpt4all_help/faq.md b/docs/gpt4all_help/faq.md

similarity index 51%

rename from gpt4all-bindings/python/docs/gpt4all_help/faq.md

rename to docs/gpt4all_help/faq.md

index c94b0d04898d..eb12bb101c13 100644

--- a/gpt4all-bindings/python/docs/gpt4all_help/faq.md

+++ b/docs/gpt4all_help/faq.md

@@ -6,32 +6,16 @@

We support models with a `llama.cpp` implementation which have been uploaded to [HuggingFace](https://huggingface.co/).

-### Which embedding models are supported?

-

-We support SBert and Nomic Embed Text v1 & v1.5.

-

## Software

### What software do I need?

All you need is to [install GPT4all](../index.md) onto you Windows, Mac, or Linux computer.

-### Which SDK languages are supported?

-

-Our SDK is in Python for usability, but these are light bindings around [`llama.cpp`](https://github.com/ggerganov/llama.cpp) implementations that we contribute to for efficiency and accessibility on everyday computers.

-

### Is there an API?

Yes, you can run your model in server-mode with our [OpenAI-compatible API](https://platform.openai.com/docs/api-reference/completions), which you can configure in [settings](../gpt4all_desktop/settings.md#application-settings)

-### Can I monitor a GPT4All deployment?

-

-Yes, GPT4All [integrates](../gpt4all_python/monitoring.md) with [OpenLIT](https://github.com/openlit/openlit) so you can deploy LLMs with user interactions and hardware usage automatically monitored for full observability.

-

-### Is there a command line interface (CLI)?

-

-[Yes](https://github.com/nomic-ai/gpt4all/tree/main/gpt4all-bindings/cli), we have a lightweight use of the Python client as a CLI. We welcome further contributions!

-

## Hardware

### What hardware do I need?

diff --git a/gpt4all-bindings/python/docs/gpt4all_help/troubleshooting.md b/docs/gpt4all_help/troubleshooting.md

similarity index 97%

rename from gpt4all-bindings/python/docs/gpt4all_help/troubleshooting.md

rename to docs/gpt4all_help/troubleshooting.md

index ba1326165cd4..da5ac2611d8f 100644

--- a/gpt4all-bindings/python/docs/gpt4all_help/troubleshooting.md

+++ b/docs/gpt4all_help/troubleshooting.md

@@ -2,7 +2,7 @@

## Error Loading Models

-It is possible you are trying to load a model from HuggingFace whose weights are not compatible with our [backend](https://github.com/nomic-ai/gpt4all/tree/main/gpt4all-bindings).

+It is possible you are trying to load a model from HuggingFace whose weights are not compatible with our [backend](https://github.com/nomic-ai/gpt4all/tree/main/gpt4all-backend).

Try downloading one of the officially supported models listed on the main models page in the application. If the problem persists, please share your experience on our [Discord](https://discord.com/channels/1076964370942267462).

diff --git a/gpt4all-bindings/python/docs/index.md b/docs/index.md

similarity index 54%

rename from gpt4all-bindings/python/docs/index.md

rename to docs/index.md

index 0b200bf43a5b..19b9ef73cdeb 100644

--- a/gpt4all-bindings/python/docs/index.md

+++ b/docs/index.md

@@ -12,17 +12,3 @@ No API calls or GPUs required - you can just download the application and [get s

[Download for Mac](https://gpt4all.io/installers/gpt4all-installer-darwin.dmg)

[Download for Linux](https://gpt4all.io/installers/gpt4all-installer-linux.run)

-

-!!! note "Python SDK"

- Use GPT4All in Python to program with LLMs implemented with the [`llama.cpp`](https://github.com/ggerganov/llama.cpp) backend and [Nomic's C backend](https://github.com/nomic-ai/gpt4all/tree/main/gpt4all-backend). Nomic contributes to open source software like [`llama.cpp`](https://github.com/ggerganov/llama.cpp) to make LLMs accessible and efficient **for all**.

-

- ```bash

- pip install gpt4all

- ```

-

- ```python

- from gpt4all import GPT4All

- model = GPT4All("Meta-Llama-3-8B-Instruct.Q4_0.gguf") # downloads / loads a 4.66GB LLM

- with model.chat_session():

- print(model.generate("How can I run LLMs efficiently on my laptop?", max_tokens=1024))

- ```

diff --git a/gpt4all-bindings/python/docs/old/gpt4all_chat.md b/docs/old/gpt4all_chat.md

similarity index 100%

rename from gpt4all-bindings/python/docs/old/gpt4all_chat.md

rename to docs/old/gpt4all_chat.md

diff --git a/gpt4all-backend-old/CMakeLists.txt b/gpt4all-backend-old/CMakeLists.txt

new file mode 100644

index 000000000000..91d314f7a017

--- /dev/null

+++ b/gpt4all-backend-old/CMakeLists.txt

@@ -0,0 +1,189 @@

+cmake_minimum_required(VERSION 3.23) # for FILE_SET

+

+include(../common/common.cmake)

+

+set(CMAKE_WINDOWS_EXPORT_ALL_SYMBOLS ON)

+set(CMAKE_EXPORT_COMPILE_COMMANDS ON)

+

+if (APPLE)

+ option(BUILD_UNIVERSAL "Build a Universal binary on macOS" ON)

+else()

+ option(LLMODEL_KOMPUTE "llmodel: use Kompute" ON)

+ option(LLMODEL_VULKAN "llmodel: use Vulkan" OFF)

+ option(LLMODEL_CUDA "llmodel: use CUDA" ON)

+ option(LLMODEL_ROCM "llmodel: use ROCm" OFF)

+endif()

+

+if (APPLE)

+ if (BUILD_UNIVERSAL)

+ # Build a Universal binary on macOS

+ # This requires that the found Qt library is compiled as Universal binaries.

+ set(CMAKE_OSX_ARCHITECTURES "arm64;x86_64" CACHE STRING "" FORCE)

+ else()

+ # Build for the host architecture on macOS

+ if (NOT CMAKE_OSX_ARCHITECTURES)

+ set(CMAKE_OSX_ARCHITECTURES "${CMAKE_HOST_SYSTEM_PROCESSOR}" CACHE STRING "" FORCE)

+ endif()

+ endif()

+endif()

+

+# Include the binary directory for the generated header file

+include_directories("${CMAKE_CURRENT_BINARY_DIR}")

+

+set(LLMODEL_VERSION_MAJOR 0)

+set(LLMODEL_VERSION_MINOR 5)

+set(LLMODEL_VERSION_PATCH 0)

+set(LLMODEL_VERSION "${LLMODEL_VERSION_MAJOR}.${LLMODEL_VERSION_MINOR}.${LLMODEL_VERSION_PATCH}")

+project(llmodel VERSION ${LLMODEL_VERSION} LANGUAGES CXX C)

+

+set(CMAKE_CXX_STANDARD 23)

+set(CMAKE_CXX_STANDARD_REQUIRED ON)

+set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${CMAKE_RUNTIME_OUTPUT_DIRECTORY})

+set(BUILD_SHARED_LIBS ON)

+

+# Check for IPO support

+include(CheckIPOSupported)

+check_ipo_supported(RESULT IPO_SUPPORTED OUTPUT IPO_ERROR)

+if (NOT IPO_SUPPORTED)

+ message(WARNING "Interprocedural optimization is not supported by your toolchain! This will lead to bigger file sizes and worse performance: ${IPO_ERROR}")

+else()

+ message(STATUS "Interprocedural optimization support detected")

+endif()

+

+set(DIRECTORY deps/llama.cpp-mainline)

+include(llama.cpp.cmake)

+

+set(BUILD_VARIANTS)

+if (APPLE)

+ list(APPEND BUILD_VARIANTS metal)

+endif()

+if (LLMODEL_KOMPUTE)

+ list(APPEND BUILD_VARIANTS kompute kompute-avxonly)

+else()

+ list(PREPEND BUILD_VARIANTS cpu cpu-avxonly)

+endif()

+if (LLMODEL_VULKAN)

+ list(APPEND BUILD_VARIANTS vulkan vulkan-avxonly)

+endif()

+if (LLMODEL_CUDA)

+ cmake_minimum_required(VERSION 3.18) # for CMAKE_CUDA_ARCHITECTURES

+

+ # Defaults must be set before enable_language(CUDA).

+ # Keep this in sync with the arch list in ggml/src/CMakeLists.txt (plus 5.0 for non-F16 branch).

+ if (NOT DEFINED CMAKE_CUDA_ARCHITECTURES)

+ # 52 == lowest CUDA 12 standard

+ # 60 == f16 CUDA intrinsics

+ # 61 == integer CUDA intrinsics

+ # 70 == compute capability at which unrolling a loop in mul_mat_q kernels is faster

+ if (GGML_CUDA_F16 OR GGML_CUDA_DMMV_F16)

+ set(CMAKE_CUDA_ARCHITECTURES "60;61;70;75") # needed for f16 CUDA intrinsics

+ else()

+ set(CMAKE_CUDA_ARCHITECTURES "50;52;61;70;75") # lowest CUDA 12 standard + lowest for integer intrinsics

+ #set(CMAKE_CUDA_ARCHITECTURES "OFF") # use this to compile much faster, but only F16 models work

+ endif()

+ endif()

+ message(STATUS "Using CUDA architectures: ${CMAKE_CUDA_ARCHITECTURES}")

+

+ include(CheckLanguage)

+ check_language(CUDA)

+ if (NOT CMAKE_CUDA_COMPILER)

+ message(WARNING "CUDA Toolkit not found. To build without CUDA, use -DLLMODEL_CUDA=OFF.")

+ endif()

+ enable_language(CUDA)

+ list(APPEND BUILD_VARIANTS cuda cuda-avxonly)

+endif()

+if (LLMODEL_ROCM)

+ enable_language(HIP)

+ list(APPEND BUILD_VARIANTS rocm rocm-avxonly)

+endif()

+

+# Go through each build variant

+foreach(BUILD_VARIANT IN LISTS BUILD_VARIANTS)

+ # Determine flags

+ if (BUILD_VARIANT MATCHES avxonly)

+ set(GPT4ALL_ALLOW_NON_AVX OFF)

+ else()

+ set(GPT4ALL_ALLOW_NON_AVX ON)

+ endif()

+ set(GGML_AVX2 ${GPT4ALL_ALLOW_NON_AVX})

+ set(GGML_F16C ${GPT4ALL_ALLOW_NON_AVX})

+ set(GGML_FMA ${GPT4ALL_ALLOW_NON_AVX})

+

+ set(GGML_METAL OFF)

+ set(GGML_KOMPUTE OFF)

+ set(GGML_VULKAN OFF)

+ set(GGML_CUDA OFF)

+ set(GGML_ROCM OFF)

+ if (BUILD_VARIANT MATCHES metal)

+ set(GGML_METAL ON)

+ elseif (BUILD_VARIANT MATCHES kompute)

+ set(GGML_KOMPUTE ON)

+ elseif (BUILD_VARIANT MATCHES vulkan)

+ set(GGML_VULKAN ON)

+ elseif (BUILD_VARIANT MATCHES cuda)

+ set(GGML_CUDA ON)

+ elseif (BUILD_VARIANT MATCHES rocm)

+ set(GGML_HIPBLAS ON)

+ endif()

+

+ # Include GGML

+ include_ggml(-mainline-${BUILD_VARIANT})

+

+ if (BUILD_VARIANT MATCHES metal)

+ set(GGML_METALLIB "${GGML_METALLIB}" PARENT_SCOPE)

+ endif()

+

+ # Function for preparing individual implementations

+ function(prepare_target TARGET_NAME BASE_LIB)

+ set(TARGET_NAME ${TARGET_NAME}-${BUILD_VARIANT})

+ message(STATUS "Configuring model implementation target ${TARGET_NAME}")

+ # Link to ggml/llama

+ target_link_libraries(${TARGET_NAME}

+ PRIVATE ${BASE_LIB}-${BUILD_VARIANT})

+ # Let it know about its build variant

+ target_compile_definitions(${TARGET_NAME}

+ PRIVATE GGML_BUILD_VARIANT="${BUILD_VARIANT}")

+ # Enable IPO if possible

+# FIXME: Doesn't work with msvc reliably. See https://github.com/nomic-ai/gpt4all/issues/841

+# set_property(TARGET ${TARGET_NAME}

+# PROPERTY INTERPROCEDURAL_OPTIMIZATION ${IPO_SUPPORTED})

+ endfunction()

+

+ # Add each individual implementations

+ add_library(llamamodel-mainline-${BUILD_VARIANT} SHARED

+ src/llamamodel.cpp src/llmodel_shared.cpp)

+ gpt4all_add_warning_options(llamamodel-mainline-${BUILD_VARIANT})

+ target_compile_definitions(llamamodel-mainline-${BUILD_VARIANT} PRIVATE

+ LLAMA_VERSIONS=>=3 LLAMA_DATE=999999)

+ target_include_directories(llamamodel-mainline-${BUILD_VARIANT} PRIVATE

+ src include/gpt4all-backend

+ )

+ prepare_target(llamamodel-mainline llama-mainline)

+

+ if (NOT PROJECT_IS_TOP_LEVEL AND BUILD_VARIANT STREQUAL cuda)

+ set(CUDAToolkit_BIN_DIR ${CUDAToolkit_BIN_DIR} PARENT_SCOPE)

+ endif()

+endforeach()

+

+add_library(llmodel

+ src/dlhandle.cpp

+ src/llmodel.cpp

+ src/llmodel_c.cpp

+ src/llmodel_shared.cpp

+)

+gpt4all_add_warning_options(llmodel)

+target_sources(llmodel PUBLIC

+ FILE_SET public_headers TYPE HEADERS BASE_DIRS include

+ FILES include/gpt4all-backend/llmodel.h

+ include/gpt4all-backend/llmodel_c.h

+ include/gpt4all-backend/sysinfo.h

+)

+target_compile_definitions(llmodel PRIVATE LIB_FILE_EXT="${CMAKE_SHARED_LIBRARY_SUFFIX}")

+target_include_directories(llmodel PRIVATE src include/gpt4all-backend)

+

+set_target_properties(llmodel PROPERTIES

+ VERSION ${PROJECT_VERSION}

+ SOVERSION ${PROJECT_VERSION_MAJOR})

+

+set(COMPONENT_NAME_MAIN ${PROJECT_NAME})

+set(CMAKE_INSTALL_PREFIX ${CMAKE_BINARY_DIR}/install)

diff --git a/gpt4all-backend/README.md b/gpt4all-backend-old/README.md

similarity index 100%

rename from gpt4all-backend/README.md

rename to gpt4all-backend-old/README.md

diff --git a/gpt4all-backend-old/deps/llama.cpp-mainline b/gpt4all-backend-old/deps/llama.cpp-mainline

new file mode 160000

index 000000000000..ea9ab6a30887

--- /dev/null

+++ b/gpt4all-backend-old/deps/llama.cpp-mainline

@@ -0,0 +1 @@

+Subproject commit ea9ab6a30887c04a4e304a2a33a31b5a152b0be8

diff --git a/gpt4all-backend/include/gpt4all-backend/llmodel.h b/gpt4all-backend-old/include/gpt4all-backend/llmodel.h

similarity index 100%

rename from gpt4all-backend/include/gpt4all-backend/llmodel.h

rename to gpt4all-backend-old/include/gpt4all-backend/llmodel.h

diff --git a/gpt4all-backend/include/gpt4all-backend/llmodel_c.h b/gpt4all-backend-old/include/gpt4all-backend/llmodel_c.h

similarity index 100%

rename from gpt4all-backend/include/gpt4all-backend/llmodel_c.h

rename to gpt4all-backend-old/include/gpt4all-backend/llmodel_c.h

diff --git a/gpt4all-backend/include/gpt4all-backend/sysinfo.h b/gpt4all-backend-old/include/gpt4all-backend/sysinfo.h

similarity index 100%

rename from gpt4all-backend/include/gpt4all-backend/sysinfo.h

rename to gpt4all-backend-old/include/gpt4all-backend/sysinfo.h

diff --git a/gpt4all-backend/llama.cpp.cmake b/gpt4all-backend-old/llama.cpp.cmake

similarity index 100%

rename from gpt4all-backend/llama.cpp.cmake

rename to gpt4all-backend-old/llama.cpp.cmake

diff --git a/gpt4all-backend/src/dlhandle.cpp b/gpt4all-backend-old/src/dlhandle.cpp

similarity index 100%

rename from gpt4all-backend/src/dlhandle.cpp

rename to gpt4all-backend-old/src/dlhandle.cpp

diff --git a/gpt4all-backend/src/dlhandle.h b/gpt4all-backend-old/src/dlhandle.h

similarity index 100%

rename from gpt4all-backend/src/dlhandle.h

rename to gpt4all-backend-old/src/dlhandle.h

diff --git a/gpt4all-backend/src/llamamodel.cpp b/gpt4all-backend-old/src/llamamodel.cpp

similarity index 100%

rename from gpt4all-backend/src/llamamodel.cpp

rename to gpt4all-backend-old/src/llamamodel.cpp

diff --git a/gpt4all-backend/src/llamamodel_impl.h b/gpt4all-backend-old/src/llamamodel_impl.h

similarity index 100%

rename from gpt4all-backend/src/llamamodel_impl.h

rename to gpt4all-backend-old/src/llamamodel_impl.h

diff --git a/gpt4all-backend/src/llmodel.cpp b/gpt4all-backend-old/src/llmodel.cpp

similarity index 100%

rename from gpt4all-backend/src/llmodel.cpp

rename to gpt4all-backend-old/src/llmodel.cpp

diff --git a/gpt4all-backend/src/llmodel_c.cpp b/gpt4all-backend-old/src/llmodel_c.cpp

similarity index 100%

rename from gpt4all-backend/src/llmodel_c.cpp

rename to gpt4all-backend-old/src/llmodel_c.cpp

diff --git a/gpt4all-backend/src/llmodel_shared.cpp b/gpt4all-backend-old/src/llmodel_shared.cpp

similarity index 100%

rename from gpt4all-backend/src/llmodel_shared.cpp

rename to gpt4all-backend-old/src/llmodel_shared.cpp

diff --git a/gpt4all-backend/src/utils.h b/gpt4all-backend-old/src/utils.h

similarity index 100%

rename from gpt4all-backend/src/utils.h

rename to gpt4all-backend-old/src/utils.h

diff --git a/gpt4all-backend-test/CMakeLists.txt b/gpt4all-backend-test/CMakeLists.txt

new file mode 100644

index 000000000000..2647bc94fff5

--- /dev/null

+++ b/gpt4all-backend-test/CMakeLists.txt

@@ -0,0 +1,10 @@

+cmake_minimum_required(VERSION 3.28...3.31)

+project(gpt4all-backend-test VERSION 0.1 LANGUAGES CXX)

+

+set(G4A_TEST_OLLAMA_URL "http://localhost:11434/" CACHE STRING "The base URL of the Ollama server to use.")

+

+set(CMAKE_RUNTIME_OUTPUT_DIRECTORY "${CMAKE_BINARY_DIR}/bin")

+include(../common/common.cmake)

+

+add_subdirectory(../gpt4all-backend gpt4all-backend)

+add_subdirectory(src)

diff --git a/gpt4all-backend-test/src/CMakeLists.txt b/gpt4all-backend-test/src/CMakeLists.txt

new file mode 100644

index 000000000000..932921a3cc16

--- /dev/null

+++ b/gpt4all-backend-test/src/CMakeLists.txt

@@ -0,0 +1,15 @@

+set(TARGET test-backend)

+

+configure_file(config.h.in "${CMAKE_CURRENT_BINARY_DIR}/include/config.h")

+

+add_executable(${TARGET}

+ main.cpp

+)

+target_compile_features(${TARGET} PUBLIC cxx_std_23)

+target_include_directories(${TARGET} PRIVATE

+ "${CMAKE_CURRENT_BINARY_DIR}/include"

+)

+gpt4all_add_warning_options(${TARGET})

+target_link_libraries(${TARGET} PRIVATE

+ gpt4all-backend

+)

diff --git a/gpt4all-backend-test/src/config.h.in b/gpt4all-backend-test/src/config.h.in

new file mode 100644

index 000000000000..45f97af5118c

--- /dev/null

+++ b/gpt4all-backend-test/src/config.h.in

@@ -0,0 +1,6 @@

+#pragma once

+

+#include <QString>

+

+

+inline const QString OLLAMA_URL = QStringLiteral("@G4A_TEST_OLLAMA_URL@");

diff --git a/gpt4all-backend-test/src/main.cpp b/gpt4all-backend-test/src/main.cpp

new file mode 100644

index 000000000000..130d3b4a53ae

--- /dev/null

+++ b/gpt4all-backend-test/src/main.cpp

@@ -0,0 +1,70 @@

+#include "config.h"

+

+#include "pretty.h"

+

+#include // IWYU pragma: keep

+#include

+#include

+#include // IWYU pragma: keep

+#include

+

+#include

+#include

+#include

+#include

+

+#include

+#include

+#include

+

+namespace json = boost::json;

+using namespace Qt::Literals::StringLiterals;

+using gpt4all::backend::OllamaClient;

+

+

+template <typename T>

+static std::string to_json(const T &value)

+{ return pretty_print(json::value_from(value)); }

+

+static void run()

+{

+ fmt::print("Connecting to server at {}\n", OLLAMA_URL);

+ OllamaClient provider(OLLAMA_URL);

+

+ auto versionResp = QCoro::waitFor(provider.version());

+ if (versionResp) {

+ fmt::print("Version response: {}\n", to_json(*versionResp));

+ } else {

+ fmt::print("Error retrieving version: {}\n", versionResp.error().errorString);

+ return QCoreApplication::exit(1);

+ }

+

+ auto modelsResponse = QCoro::waitFor(provider.list());

+ if (modelsResponse) {

+ fmt::print("Available models:\n");

+ for (const auto & model : modelsResponse->models)

+ fmt::print("{}\n", model.model);

+ if (!modelsResponse->models.empty())

+ fmt::print("First model: {}\n", to_json(modelsResponse->models.front()));

+ } else {

+ fmt::print("Error retrieving available models: {}\n", modelsResponse.error().errorString);

+ return QCoreApplication::exit(1);

+ }

+

+ auto showResponse = QCoro::waitFor(provider.show({ .model = "DeepSeek-R1-Distill-Llama-70B-Q4_K_S" }));

+ if (showResponse) {

+ fmt::print("Show response: {}\n", to_json(*showResponse));

+ } else {

+ fmt::print("Error retrieving model info: {}\n", showResponse.error().errorString);

+ return QCoreApplication::exit(1);

+ }

+

+ QCoreApplication::exit(0);

+}

+

+int main(int argc, char *argv[])

+{

+ QCoreApplication app(argc, argv);

+ QTimer::singleShot(0, &run);

+ return app.exec();

+}

diff --git a/gpt4all-backend-test/src/pretty.h b/gpt4all-backend-test/src/pretty.h

new file mode 100644

index 000000000000..cd817caf77b0

--- /dev/null

+++ b/gpt4all-backend-test/src/pretty.h

@@ -0,0 +1,95 @@

+#pragma once

+

+#include

+

+#include

+#include

+

+

+inline void pretty_print( std::ostream& os, boost::json::value const& jv, std::string* indent = nullptr )

+{

+ std::string indent_;

+ if(! indent)

+ indent = &indent_;

+ switch(jv.kind())

+ {

+ case boost::json::kind::object:

+ {

+ os << "{\n";

+ indent->append(4, ' ');

+ auto const& obj = jv.get_object();

+ if(! obj.empty())

+ {

+ auto it = obj.begin();

+ for(;;)

+ {

+ os << *indent << boost::json::serialize(it->key()) << ": ";

+ pretty_print(os, it->value(), indent);

+ if(++it == obj.end())

+ break;

+ os << ",\n";

+ }

+ }

+ os << "\n";

+ indent->resize(indent->size() - 4);

+ os << *indent << "}";

+ break;

+ }

+

+ case boost::json::kind::array:

+ {

+ os << "[\n";

+ indent->append(4, ' ');

+ auto const& arr = jv.get_array();

+ if(! arr.empty())

+ {

+ auto it = arr.begin();

+ for(;;)

+ {

+ os << *indent;

+ pretty_print( os, *it, indent);

+ if(++it == arr.end())

+ break;

+ os << ",\n";

+ }

+ }

+ os << "\n";

+ indent->resize(indent->size() - 4);

+ os << *indent << "]";

+ break;

+ }

+

+ case boost::json::kind::string:

+ {

+ os << boost::json::serialize(jv.get_string());

+ break;

+ }

+

+ case boost::json::kind::uint64:

+ case boost::json::kind::int64:

+ case boost::json::kind::double_:

+ os << jv;

+ break;

+

+ case boost::json::kind::bool_:

+ if(jv.get_bool())

+ os << "true";

+ else

+ os << "false";

+ break;

+

+ case boost::json::kind::null:

+ os << "null";

+ break;

+ }

+

+ if(indent->empty())

+ os << "\n";

+}

+

+inline std::string pretty_print( boost::json::value const& jv, std::string* indent = nullptr )

+{

+ std::ostringstream ss;

+ pretty_print(ss, jv, indent);

+ return ss.str();

+}

diff --git a/gpt4all-backend/CMakeLists.txt b/gpt4all-backend/CMakeLists.txt

index 91d314f7a017..10077efdceb7 100644

--- a/gpt4all-backend/CMakeLists.txt

+++ b/gpt4all-backend/CMakeLists.txt

@@ -1,189 +1,22 @@

-cmake_minimum_required(VERSION 3.23) # for FILE_SET

+cmake_minimum_required(VERSION 3.28...3.31)

+project(gpt4all-backend VERSION 0.1 LANGUAGES CXX)

+set(CMAKE_CXX_STANDARD 23) # make sure fmt is compiled with the same C++ version as us

include(../common/common.cmake)

-set(CMAKE_WINDOWS_EXPORT_ALL_SYMBOLS ON)

-set(CMAKE_EXPORT_COMPILE_COMMANDS ON)

-

-if (APPLE)

- option(BUILD_UNIVERSAL "Build a Universal binary on macOS" ON)

-else()

- option(LLMODEL_KOMPUTE "llmodel: use Kompute" ON)

- option(LLMODEL_VULKAN "llmodel: use Vulkan" OFF)

- option(LLMODEL_CUDA "llmodel: use CUDA" ON)

- option(LLMODEL_ROCM "llmodel: use ROCm" OFF)

-endif()

-

-if (APPLE)

- if (BUILD_UNIVERSAL)

- # Build a Universal binary on macOS

- # This requires that the found Qt library is compiled as Universal binaries.

- set(CMAKE_OSX_ARCHITECTURES "arm64;x86_64" CACHE STRING "" FORCE)

- else()

- # Build for the host architecture on macOS

- if (NOT CMAKE_OSX_ARCHITECTURES)

- set(CMAKE_OSX_ARCHITECTURES "${CMAKE_HOST_SYSTEM_PROCESSOR}" CACHE STRING "" FORCE)

- endif()

- endif()

-endif()

-

-# Include the binary directory for the generated header file

-include_directories("${CMAKE_CURRENT_BINARY_DIR}")

-

-set(LLMODEL_VERSION_MAJOR 0)

-set(LLMODEL_VERSION_MINOR 5)

-set(LLMODEL_VERSION_PATCH 0)

-set(LLMODEL_VERSION "${LLMODEL_VERSION_MAJOR}.${LLMODEL_VERSION_MINOR}.${LLMODEL_VERSION_PATCH}")

-project(llmodel VERSION ${LLMODEL_VERSION} LANGUAGES CXX C)

-

-set(CMAKE_CXX_STANDARD 23)

-set(CMAKE_CXX_STANDARD_REQUIRED ON)

-set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${CMAKE_RUNTIME_OUTPUT_DIRECTORY})

-set(BUILD_SHARED_LIBS ON)

-

-# Check for IPO support

-include(CheckIPOSupported)

-check_ipo_supported(RESULT IPO_SUPPORTED OUTPUT IPO_ERROR)

-if (NOT IPO_SUPPORTED)

- message(WARNING "Interprocedural optimization is not supported by your toolchain! This will lead to bigger file sizes and worse performance: ${IPO_ERROR}")

-else()

- message(STATUS "Interprocedural optimization support detected")

-endif()

-

-set(DIRECTORY deps/llama.cpp-mainline)

-include(llama.cpp.cmake)

-

-set(BUILD_VARIANTS)

-if (APPLE)

- list(APPEND BUILD_VARIANTS metal)

-endif()

-if (LLMODEL_KOMPUTE)

- list(APPEND BUILD_VARIANTS kompute kompute-avxonly)

-else()

- list(PREPEND BUILD_VARIANTS cpu cpu-avxonly)

-endif()

-if (LLMODEL_VULKAN)

- list(APPEND BUILD_VARIANTS vulkan vulkan-avxonly)

-endif()

-if (LLMODEL_CUDA)

- cmake_minimum_required(VERSION 3.18) # for CMAKE_CUDA_ARCHITECTURES

-

- # Defaults must be set before enable_language(CUDA).

- # Keep this in sync with the arch list in ggml/src/CMakeLists.txt (plus 5.0 for non-F16 branch).

- if (NOT DEFINED CMAKE_CUDA_ARCHITECTURES)

- # 52 == lowest CUDA 12 standard

- # 60 == f16 CUDA intrinsics

- # 61 == integer CUDA intrinsics

- # 70 == compute capability at which unrolling a loop in mul_mat_q kernels is faster

- if (GGML_CUDA_F16 OR GGML_CUDA_DMMV_F16)

- set(CMAKE_CUDA_ARCHITECTURES "60;61;70;75") # needed for f16 CUDA intrinsics

- else()

- set(CMAKE_CUDA_ARCHITECTURES "50;52;61;70;75") # lowest CUDA 12 standard + lowest for integer intrinsics

- #set(CMAKE_CUDA_ARCHITECTURES "OFF") # use this to compile much faster, but only F16 models work

- endif()

- endif()

- message(STATUS "Using CUDA architectures: ${CMAKE_CUDA_ARCHITECTURES}")

-

- include(CheckLanguage)

- check_language(CUDA)

- if (NOT CMAKE_CUDA_COMPILER)

- message(WARNING "CUDA Toolkit not found. To build without CUDA, use -DLLMODEL_CUDA=OFF.")

- endif()

- enable_language(CUDA)

- list(APPEND BUILD_VARIANTS cuda cuda-avxonly)

-endif()

-if (LLMODEL_ROCM)

- enable_language(HIP)

- list(APPEND BUILD_VARIANTS rocm rocm-avxonly)

-endif()

-

-# Go through each build variant

-foreach(BUILD_VARIANT IN LISTS BUILD_VARIANTS)

- # Determine flags

- if (BUILD_VARIANT MATCHES avxonly)

- set(GPT4ALL_ALLOW_NON_AVX OFF)

- else()

- set(GPT4ALL_ALLOW_NON_AVX ON)

- endif()

- set(GGML_AVX2 ${GPT4ALL_ALLOW_NON_AVX})

- set(GGML_F16C ${GPT4ALL_ALLOW_NON_AVX})

- set(GGML_FMA ${GPT4ALL_ALLOW_NON_AVX})

-

- set(GGML_METAL OFF)

- set(GGML_KOMPUTE OFF)

- set(GGML_VULKAN OFF)

- set(GGML_CUDA OFF)

- set(GGML_ROCM OFF)

- if (BUILD_VARIANT MATCHES metal)

- set(GGML_METAL ON)

- elseif (BUILD_VARIANT MATCHES kompute)

- set(GGML_KOMPUTE ON)

- elseif (BUILD_VARIANT MATCHES vulkan)

- set(GGML_VULKAN ON)

- elseif (BUILD_VARIANT MATCHES cuda)

- set(GGML_CUDA ON)

- elseif (BUILD_VARIANT MATCHES rocm)

- set(GGML_HIPBLAS ON)

- endif()

-

- # Include GGML

- include_ggml(-mainline-${BUILD_VARIANT})

-

- if (BUILD_VARIANT MATCHES metal)

- set(GGML_METALLIB "${GGML_METALLIB}" PARENT_SCOPE)

- endif()

-

- # Function for preparing individual implementations

- function(prepare_target TARGET_NAME BASE_LIB)

- set(TARGET_NAME ${TARGET_NAME}-${BUILD_VARIANT})

- message(STATUS "Configuring model implementation target ${TARGET_NAME}")

- # Link to ggml/llama

- target_link_libraries(${TARGET_NAME}

- PRIVATE ${BASE_LIB}-${BUILD_VARIANT})

- # Let it know about its build variant

- target_compile_definitions(${TARGET_NAME}

- PRIVATE GGML_BUILD_VARIANT="${BUILD_VARIANT}")

- # Enable IPO if possible

-# FIXME: Doesn't work with msvc reliably. See https://github.com/nomic-ai/gpt4all/issues/841

-# set_property(TARGET ${TARGET_NAME}

-# PROPERTY INTERPROCEDURAL_OPTIMIZATION ${IPO_SUPPORTED})

- endfunction()

-

- # Add each individual implementations

- add_library(llamamodel-mainline-${BUILD_VARIANT} SHARED

- src/llamamodel.cpp src/llmodel_shared.cpp)

- gpt4all_add_warning_options(llamamodel-mainline-${BUILD_VARIANT})

- target_compile_definitions(llamamodel-mainline-${BUILD_VARIANT} PRIVATE

- LLAMA_VERSIONS=>=3 LLAMA_DATE=999999)

- target_include_directories(llamamodel-mainline-${BUILD_VARIANT} PRIVATE

- src include/gpt4all-backend

- )

- prepare_target(llamamodel-mainline llama-mainline)

-

- if (NOT PROJECT_IS_TOP_LEVEL AND BUILD_VARIANT STREQUAL cuda)

- set(CUDAToolkit_BIN_DIR ${CUDAToolkit_BIN_DIR} PARENT_SCOPE)

- endif()

-endforeach()

-

-add_library(llmodel

- src/dlhandle.cpp

- src/llmodel.cpp

- src/llmodel_c.cpp

- src/llmodel_shared.cpp

-)

-gpt4all_add_warning_options(llmodel)

-target_sources(llmodel PUBLIC

- FILE_SET public_headers TYPE HEADERS BASE_DIRS include

- FILES include/gpt4all-backend/llmodel.h

- include/gpt4all-backend/llmodel_c.h

- include/gpt4all-backend/sysinfo.h

+find_package(Qt6 6.8 COMPONENTS Concurrent Core Network REQUIRED)

+

+add_subdirectory(../deps common_deps)

+add_subdirectory(deps)

+add_subdirectory(src)

+

+target_sources(gpt4all-backend PUBLIC

+ FILE_SET public_headers TYPE HEADERS BASE_DIRS include FILES