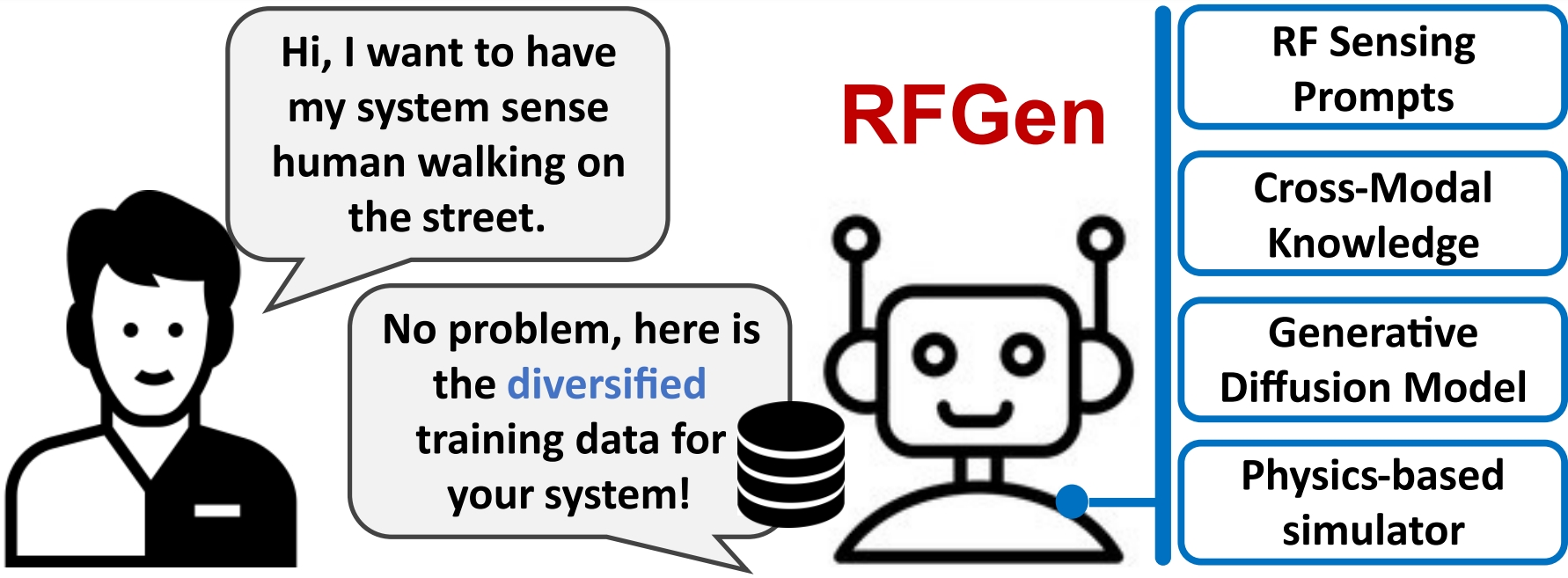

The official implementation of RF Genesis: Zero-Shot Generalization of mmWave Sensing through Simulation-Based Data Synthesis and Generative Diffusion Models.

Xingyu Chen, Xinyu Zhang, UC San Diego.

Sorry for the long wait: the RFLoRA model is finally released! Feel free to try it out.

Please note that RFLoRA was a workaround developed back in 2023, before 3D diffusion models became available. In 2025, I’ll be adding support for 3D diffusion models for indoor room generation.

Also, I’ve noticed that some dependencies, including MDM and Mitsuba, have updated their APIs. I’ll start maintaining this project again while also preparing for RFGenV2!

📢 Jun/25 - RFLoRA model released, trained on under 20k images!

📢 Jun/25 - Experimental CUDA kernel for signal generation, reducing memory usage by 1000x!

📢 29/Mar/24 - Added the code for point-cloud processing and visualization.

📢 22/Jan/24 - Initial release of RF Genesis!

Planned updates:

- Replace Mitsuba with a custom ray-tracing engine.

- Add 3D diffusion, including indoor environments.

- More documentation.

This code was tested on Ubuntu 20.04.5 LTS and requires:

- Python 3.10

- conda3 or miniconda3

- A CUDA-capable GPU (one is enough)

Clone the repository:

```bash
git clone https://github.com/Asixa/RF-Genesis.git
cd RF-Genesis
```

Create a conda environment:

```bash
conda create -n rfgen python=3.10 -y
conda activate rfgen
```

Install the Python packages and run the setup script:

```bash
pip install -r requirements.txt
sh setup.sh
```

Run a simple example:

```bash
python run.py -o "a person walking back and forth" -e "a living room" -n "hello_rfgen"
```

Optional flags:

- `--no-visualize`: skip visualization rendering.

- `--no-environment`: skip environment diffusion.
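To generate several scenes in one go, the command above can be wrapped in a small script. This is a minimal sketch, not part of the repository: `build_command` and `run_batch` are hypothetical helpers, and it assumes (from the example above) that `-o` takes the motion prompt, `-e` the environment prompt, and `-n` a run name. It also assumes it is executed from the repository root inside the activated `rfgen` environment.

```python
import subprocess
import sys


def build_command(motion_prompt, environment_prompt, name,
                  visualize=True, environment=True):
    """Build the argv list for one run.py invocation (hypothetical helper)."""
    cmd = [sys.executable, "run.py",
           "-o", motion_prompt,
           "-e", environment_prompt,
           "-n", name]
    if not visualize:
        cmd.append("--no-visualize")    # skip visualization rendering
    if not environment:
        cmd.append("--no-environment")  # skip environment diffusion
    return cmd


def run_batch(jobs, **flags):
    """Run run.py once per (motion, environment, name) tuple, stopping on the first failure."""
    for motion, env, name in jobs:
        subprocess.run(build_command(motion, env, name, **flags), check=True)
```

For example, `run_batch([("a person walking back and forth", "a living room", "walk_living_room")], visualize=False)` runs the quick-start example without rendering. Passing the arguments as a list (rather than a shell string) avoids quoting problems with prompts that contain spaces.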

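RFLoRA can also be used on its own with diffusers to generate indoor scene images:

```python
import matplotlib.pyplot as plt
import torch
from diffusers import StableDiffusionPipeline

# Load the Protogen x5.3 base model in half precision on the GPU
pipe = StableDiffusionPipeline.from_pretrained(
    "darkstorm2150/Protogen_x5.3_Official_Release",
    torch_dtype=torch.float16,
    safety_checker=None,
).to("cuda")

# Apply the RFLoRA weights on top of the base model
pipe.load_lora_weights("Asixa/RFLoRA")

prompt = "a living room with a table, a chair, a TV, a computer, a lamp, a plant, a window, a door"
image = pipe(prompt, num_inference_steps=25).images[0]  # a PIL image

# Display the generated image
plt.imshow(image)
plt.axis("off")
plt.show()
```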

Rendered SMPL animation and radar point clouds.

The current simulation models the Texas Instruments AWR1843 radar with a 3TX/4RX MIMO setup.
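For readers new to FMCW radar such as the AWR1843, the sketch below shows the generic textbook idea behind simulating one chirp: each point reflector at range R produces a beat tone at frequency 2·S·R/c in the IF signal, and an FFT over the samples recovers the range. This is not RF Genesis's own signal-generation code; the function names and all numeric values are hypothetical, and it ignores the constant carrier phase term, antenna patterns, noise, and motion.

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)


def simulate_if_chirp(ranges_m, amplitudes, slope_hz_per_s, sample_rate_hz, num_samples):
    """Complex-baseband IF samples of one FMCW chirp for static point reflectors (simplified)."""
    t = np.arange(num_samples) / sample_rate_hz
    signal = np.zeros(num_samples, dtype=np.complex128)
    for r, a in zip(ranges_m, amplitudes):
        beat_freq = 2.0 * slope_hz_per_s * r / C  # beat frequency grows linearly with range
        signal += a * np.exp(2j * np.pi * beat_freq * t)
    return signal


def estimate_range(if_signal, slope_hz_per_s, sample_rate_hz):
    """Range of the strongest reflector from the peak of a windowed range FFT."""
    n = len(if_signal)
    spectrum = np.abs(np.fft.fft(if_signal * np.hanning(n)))
    peak_bin = int(np.argmax(spectrum))
    bin_to_range = C * sample_rate_hz / (2.0 * slope_hz_per_s * n)  # metres per FFT bin
    return peak_bin * bin_to_range
```

With a hypothetical slope of 60 MHz/µs, 10 MS/s sampling and 256 samples, `estimate_range(simulate_if_chirp([5.0], [1.0], 60e12, 10e6, 256), 60e12, 10e6)` returns a value within one range bin (about 0.1 m) of 5 m.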

The radar configuration is defined in `TI1843.json` and can be freely adjusted.
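When adjusting the configuration, it helps to check what the chirp parameters imply. The sketch below computes the virtual array size and the standard FMCW resolution and limit formulas. The dictionary keys and values are hypothetical and for illustration only; they are not read from `TI1843.json`, whose actual field names may differ. It assumes complex baseband sampling and TDM-MIMO, where the three TX antennas transmit in turn.

```python
C = 299_792_458.0  # speed of light (m/s)

# Hypothetical example values; not taken from TI1843.json.
example_config = {
    "num_tx": 3,
    "num_rx": 4,
    "start_freq_hz": 77e9,
    "slope_hz_per_s": 60e12,   # 60 MHz/us
    "adc_samples": 256,
    "sample_rate_hz": 10e6,
    "chirp_period_s": 100e-6,  # time between consecutive chirps
}


def derived_parameters(cfg):
    """Standard FMCW quantities implied by a chirp configuration."""
    # Bandwidth swept while the ADC is sampling
    bandwidth = cfg["slope_hz_per_s"] * cfg["adc_samples"] / cfg["sample_rate_hz"]
    wavelength = C / cfg["start_freq_hz"]
    return {
        # Each TX/RX pair forms one virtual antenna (3 x 4 = 12 for a 3TX/4RX setup)
        "virtual_antennas": cfg["num_tx"] * cfg["num_rx"],
        "range_resolution_m": C / (2.0 * bandwidth),
        # Complex sampling: highest beat frequency equals the sample rate
        "max_range_m": cfg["sample_rate_hz"] * C / (2.0 * cfg["slope_hz_per_s"]),
        # With TDM-MIMO the same TX repeats every num_tx chirps
        "max_velocity_mps": wavelength / (4.0 * cfg["num_tx"] * cfg["chirp_period_s"]),
    }
```

With these example values, `derived_parameters(example_config)` gives 12 virtual antennas, roughly 0.098 m range resolution, a maximum range of about 25 m, and a maximum unambiguous velocity of about 3.2 m/s. To check the real file, load it with `json.load` and map its actual keys onto these inputs.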

If you use this code, please cite:

```bibtex
@inproceedings{chen2023rfgenesis,
  author    = {Chen, Xingyu and Zhang, Xinyu},
  title     = {RF Genesis: Zero-Shot Generalization of mmWave Sensing through Simulation-Based Data Synthesis and Generative Diffusion Models},
  booktitle = {ACM Conference on Embedded Networked Sensor Systems (SenSys ’23)},
  year      = {2023},
  pages     = {1-14},
  address   = {Istanbul, Turkiye},
  publisher = {ACM, New York, NY, USA},
  url       = {https://doi.org/10.1145/3625687.3625798},
  doi       = {10.1145/3625687.3625798}
}
```

This code is distributed under the MIT License. Note that it depends on other libraries, including CLIP, SMPL, MDM, and mmMesh, and uses datasets, each with its own license that must also be followed.