An awesome collection of LLM resources

Based on: a summary of the large language models (LLMs) currently available

| Model | Author | Size | Type | Open source? |
|---|---|---|---|---|
| LLaMa | Meta AI | 7B-65B | Decoder | open |
| OPT | Meta AI | 125M-175B | Decoder | open |
| T5 | Google | 220M-11B | Encoder-Decoder | open |
| mT5 | Google | 235M-13B | Encoder-Decoder | open |
| UL2 | Google | 20B | Encoder-Decoder | open |
| PaLM | Google | 540B | Decoder | no |
| LaMDA | Google | 2B-137B | Decoder | no |
| FLAN-T5 | Google | same as T5 | Encoder-Decoder | open |
| FLAN-UL2 | Google | same as UL2 | Encoder-Decoder | open |
| FLAN-PaLM | Google | same as PaLM | Decoder | no |
| FLAN | Google | same as LaMDA | Decoder | no |
| BLOOM | BigScience | 176B | Decoder | open |
| T0 | BigScience | 3B | Encoder-Decoder | open |
| BLOOMZ | BigScience | same as BLOOM | Decoder | open |
| mT0 | BigScience | same as T0 | Encoder-Decoder | open |
| GPT-Neo | EleutherAI | 125M-2.7B | Decoder | open |
| GPT-NeoX | EleutherAI | 20B | Decoder | open |
| GPT3 | OpenAI | 175B (davinci) | Decoder | no |
| GPT4 | OpenAI | unknown | unknown | no |
| InstructGPT | OpenAI | 1.3B | Decoder | no |
| Alpaca | Stanford | same as LLaMa | Decoder | open |
| ChatGLM-6B | Tsinghua University | 6B | Decoder | open |
| GLM-130B | Tsinghua University | 130B | Decoder | open |

Based on: a summary of the open-source Instruct/Prompt Tuning data currently available

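| Dataset / project | Organization / author | Description |
|---|---|---|
| Natural Instruction / Super-Natural Instruction | Allen AI | Instruction data covering 61 NLP tasks (Natural Instruction) and 1,600 NLP tasks (Super-Natural Instruction) |
| PromptSource / P3 | BigScience | More than 2,000 prompt templates for 270 NLP tasks (PromptSource), plus the P3 dataset, whose size is in the 100M-1B range |
| xMTF | BigScience | Multilingual prompt data covering 13 NLP tasks and 46 languages |
| HH-RLHF | Anthropic | RLHF dataset intended for training Helpful and Harmless (HH) LLMs |
| Unnatural Instruction | orhonovich | 64k instruction prompts generated with GPT3, expanded to 240k instruction examples through rephrasing |
| Self-Instruct | yizhongw | A method that uses LLMs to generate prompts for instruct-tuning; introduces concepts such as a task pool and quality filtering |
| UnifiedSKG | HKU | Adds knowledge grounding to the Text-to-Text framework by serializing structured data and embedding it in the prompt |
| Flan Collection | Google | Merges the Flan 2021 data with several open-source instruction datasets (P3, Super-Natural Instruction, etc.) |
| InstructDial | prakharguptaz | An attempt at instruction tuning on one specific task type (dialogue instructions) |
| Alpaca-LoRA | tloen | Low-Rank LLaMA Instruct-Tuning |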

- Prompt Engineering Guide - Guides, papers, lecture, and resources for prompt engineering

- LangChain - ⚡ Building applications with LLMs through composability ⚡

- ChatLangChain - An implementation of a locally hosted chatbot specifically focused on question answering over the LangChain documentation. Built with LangChain and FastAPI.

- Langflow - LangFlow is a GUI for LangChain, designed with react-flow to provide an effortless way to experiment and prototype flows with drag-and-drop components and a chat box.

- Alpaca

- Alpaca-LoRA - Low-Rank LLaMA Instruct-Tuning: instruct-tune LLaMA on consumer hardware.

- Alpaca-LoRA as a Chatbot Service

- AlpacaDataCleaned

- BELLE - Bloom-Enhanced Large Language model Engine. Builds on Stanford Alpaca with optimizations for Chinese; model tuning uses only data produced by ChatGPT (no other data is included).

- https://github.com/LC1332/Chinese-alpaca-lora - A Chinese instruction-finetuned LLaMA.

- Dolly - fine-tunes the GPT-J 6B model on the Alpaca dataset using a Databricks notebook.

- ChatRWKV - ChatRWKV is like ChatGPT but powered by RWKV (100% RNN) language model, and open source.

- GPT-J

- PEFT - Parameter-Efficient Fine-Tuning (PEFT) methods enable efficient adaptation of pre-trained language models (PLMs) to various downstream applications without fine-tuning all the model's parameters.
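Several entries above (Alpaca-LoRA, PEFT) rely on low-rank adaptation, so a small illustration may help. The following is a minimal pure-Python sketch of the idea, not the PEFT library's API: the frozen weight `W` is left untouched and only two small matrices `A` and `B` are trained. All function names, shapes, and the `scale` parameter are assumptions chosen for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_counts(d_in, d_out, rank):
    """Return (full fine-tuning params, low-rank adapter params) for one weight matrix."""
    full = d_in * d_out
    adapter = rank * (d_in + d_out)
    return full, adapter


def lora_forward(x, W, A, B, scale=1.0):
    """Compute x @ W + scale * (x @ A @ B).

    W: (d_in, d_out) frozen pre-trained weight (hypothetical values).
    A: (d_in, rank) trainable down-projection.
    B: (rank, d_out) trainable up-projection; starting it at zero leaves
       the adapted layer identical to the frozen one before training.
    """
    base = matmul(x, W)
    update = matmul(matmul(x, A), B)
    return [[b + scale * u for b, u in zip(rb, ru)] for rb, ru in zip(base, update)]


if __name__ == "__main__":
    full, adapter = lora_param_counts(4096, 4096, 8)
    print(f"full: {full}, adapter: {adapter}, ratio: {adapter / full:.4%}")
```

For a 4096 x 4096 layer at rank 8, the adapter trains 65,536 parameters instead of 16,777,216, which is why this style of tuning can fit on consumer hardware.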

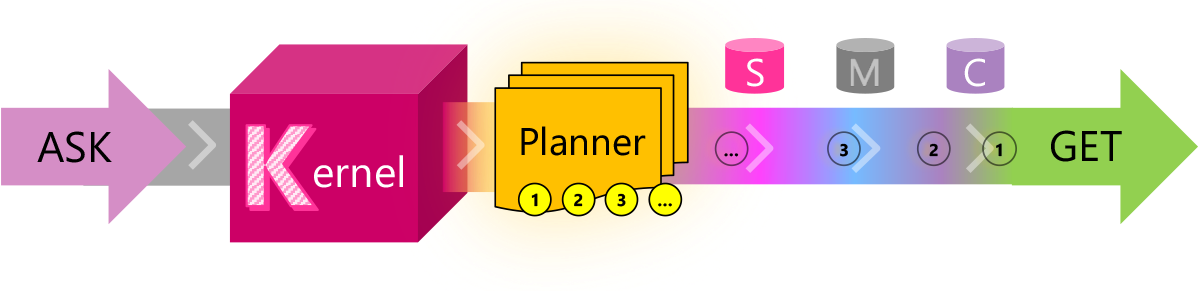

Semantic Kernel is designed to support and encapsulate several design patterns from the latest in AI research, such that developers can infuse their applications with complex skills like prompt chaining, recursive reasoning, summarization, zero/few-shot learning, contextual memory, long-term memory, embeddings, semantic indexing, planning, and accessing external knowledge stores as well as your own data.
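Of the patterns listed, prompt chaining is perhaps the easiest to picture. The sketch below is a generic illustration in plain Python and does not use Semantic Kernel's actual API: `chain`, `echo_llm`, and the `{input}` placeholder are hypothetical names, and `echo_llm` is a stand-in for a real model call.

```python
from typing import Callable, List


def chain(templates: List[str], llm: Callable[[str], str]) -> Callable[[str], str]:
    """Build a pipeline in which each step's output becomes the next prompt's input."""
    def run(text: str) -> str:
        for template in templates:
            prompt = template.format(input=text)  # fill the placeholder
            text = llm(prompt)                    # the output feeds the next step
        return text
    return run


def echo_llm(prompt: str) -> str:
    """Stand-in for a model call (assumption): just upper-cases the prompt."""
    return prompt.upper()


if __name__ == "__main__":
    pipeline = chain(["Summarize: {input}", "Translate to French: {input}"], echo_llm)
    print(pipeline("hello"))
```

Swapping `echo_llm` for a function that calls an actual model would turn the same structure into a working two-step chain.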

- MathPrompter: Mathematical Reasoning using Large Language Models